Data Center In A Box


A few weeks ago, I was thinking of building an HPCC in a box for myself, based on the idea given by Douglas Eadline on his website [[1]]. Later, while dealing with some problems of one of my clients, I decided to design an inexpensive chassis which could hold inexpensive "servers". My client's setup required multiple Xen/KVM hosts, each running a number of virtual machines. The chassis is to hold everything: the cluster motherboards, their power supplies, network switches, power cables, KVM, etc. Currently our servers are rented from various server renting companies. But managing them remotely, and particularly the cost of a SAN slice and the private network requirements, was driving us over the edge. So I thought, why not design something which I could place anywhere in the world (wherever I am living), and do everything from the comfort of my home (or my own office)!

For most of you, this would definitely sound like re-inventing the wheel. True. It is. However, the wheels I know of (from major players such as Dell, IBM, HP, etc.) are too expensive for a small IT shop such as my client (and even myself!). Thus, this is an attempt to re-invent the wheel, but an inexpensive one, which everyone can afford: a solution which uses COTS (Commercial Off The Shelf) components and FOSS (Free/Open Source Software), and yet delivers the computational power necessary to perform certain tasks, while consuming less electricity and needing less cooling.

Here are the design goals of this project:


Project (Design) Goals

- Utilize inexpensive and unbranded COTS (Commercial Off The Shelf) components, e.g. common ATX or uATX motherboards, common Core2Duo or Core2Quad processors, etc.

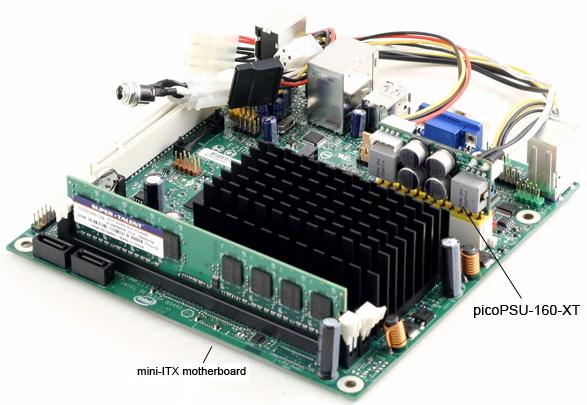

- In some situations Mini-ITX motherboards can also be used. The mounting holes of a Mini-ITX motherboard match those of an ATX motherboard. I am still skeptical about which processors they support, how much RAM they support and, most importantly, their availability. ATX and uATX are more commonly available all over the world than Mini-ITX. Unless they support 4GB of RAM and a good processor, they are not suitable for virtualization projects. The cost can be low, but the performance would probably be unbearable.

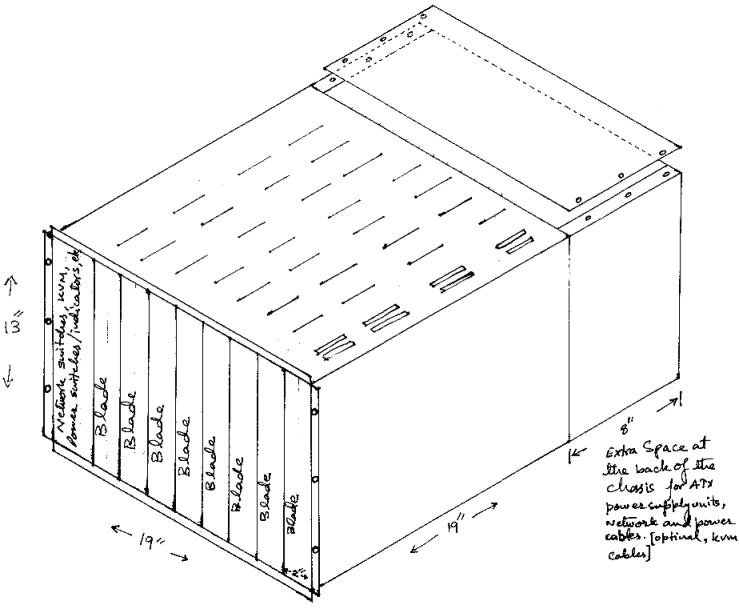

- Should not take more than 19" x 19" on the desk/floor.

- Being 19" wide, it should fit in any server rack. The height is 14", which is 8U in terms of rack space.

- Use low power CPUs on the motherboards, so less power is needed. (At the moment, I know of 45 Watt CPUs and 65 Watt CPUs.) Ideally the whole chassis should consume less than 100 Watts when idle, and not more than 800-1000 Watts when loaded. (These are starting figures. They will change based on further calculations / data.)

- Should have power supply efficiency above 80%, so less heat is generated by the PSU.

- Being low power also means that it would need less cooling.

- Try using inexpensive USB (zip/pen/thumb) drives to boot the worker nodes. PXE booting from a central NFS server can also be used.

- All network switches and KVMs with their cables will be placed inside the chassis. This would essentially result in only one main power cable coming out of the chassis, and a standard RJ45 port to connect to the network.

- Expand as you go. That is, you can start with a couple of blades and increase capacity when needed.
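The power targets above can be sanity-checked with some simple arithmetic. The following is only a rough sketch: it uses CPU TDP as a stand-in for worst-case CPU draw, and the per-blade figure for everything other than the CPU (`other_w`, set to a hypothetical 25 W) is an assumption, not a measurement.

```python
def wall_power_w(blades, cpu_tdp_w, other_w, psu_efficiency=0.80):
    """Estimate power drawn from the wall socket by a fully loaded chassis.

    blades         -- number of populated blades
    cpu_tdp_w      -- CPU TDP in watts, used as a proxy for CPU draw
    other_w        -- ASSUMED draw of board, RAM, boot drive, etc. per blade
    psu_efficiency -- fraction of wall power that reaches the components
    """
    dc_load = blades * (cpu_tdp_w + other_w)
    return dc_load / psu_efficiency


def max_blades(budget_w, cpu_tdp_w, other_w, psu_efficiency=0.80):
    """Largest blade count whose estimated wall power stays within budget_w."""
    per_blade = (cpu_tdp_w + other_w) / psu_efficiency
    return int(budget_w // per_blade)


if __name__ == "__main__":
    OTHER_W = 25  # hypothetical non-CPU load per blade, in watts
    for tdp in (35, 65):
        n = max_blades(1000, tdp, OTHER_W)
        watts = wall_power_w(n, tdp, OTHER_W)
        print(f"{tdp} W CPUs: up to {n} blades within 1000 W "
              f"(about {watts:.0f} W at the wall)")
```

The same function also shows why PSU efficiency matters: at 80% efficiency, a fifth of whatever is drawn from the wall turns into heat inside the PSU before it reaches a blade.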

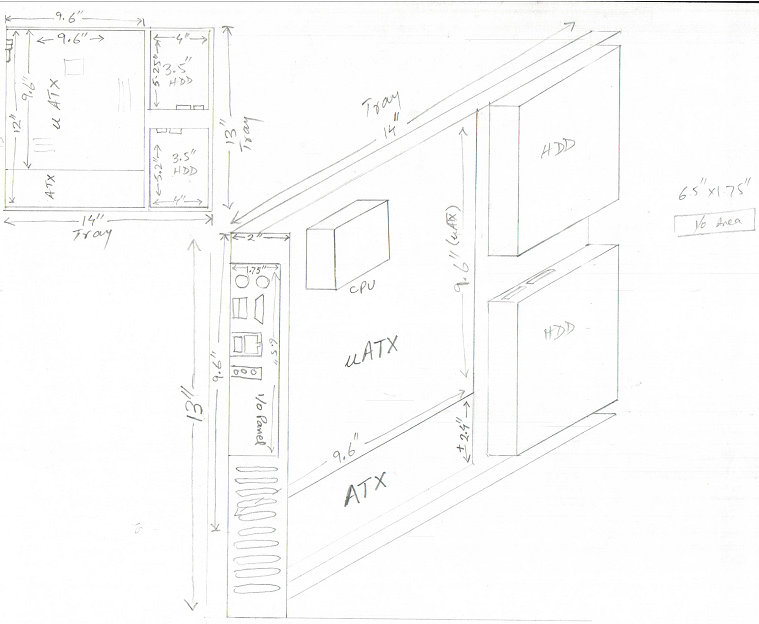

- The tray which holds the motherboard will support standard ATX, Mini ATX and Micro ATX boards without any modification. That means you can mix and match various form factors of ATX motherboards, depending on your requirements. This also provides options for upgradeability. See the next point.

- The boards will support both Intel Core2Duo and Core2Quad processors. Similarly, if you are an AMD fan, boards which support the latest models of AMD's desktop processors can be used. This means that processors can be upgraded when needed. In other words, you can start with a Core2Duo, for example, and later upgrade to a Core2Quad.

- Maintenance is designed to be very easy. Just pull the blade out, unplug its power and network connections, replace whatever is faulty (or upgrade it), and slide it back in.

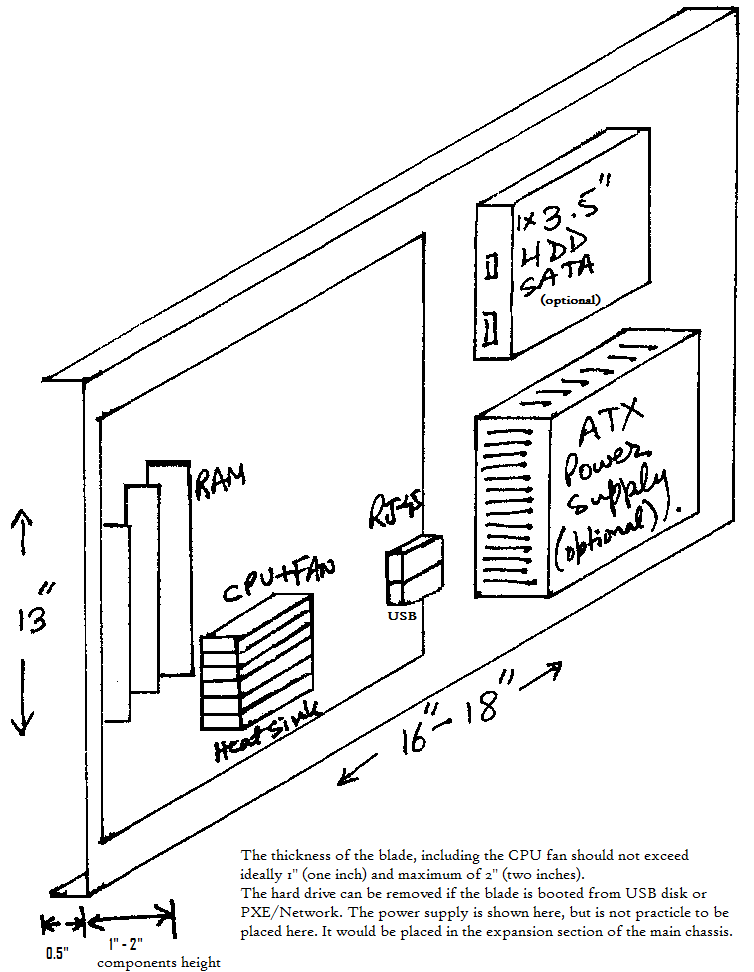

- The "thickness" of the blade is planned to be 1" at most, including CPU heat sinks/fans. If this is doable, the chassis density can be increased to 16 blades! If 1" cannot be achieved (mainly because of the size of the CPU heat sink), then the thickness must not exceed 2".

- Air flow will be provided to the chassis through large 4" low-RPM brushless fans, mounted in front of the chassis (not shown). A "fan tray" is planned for both the front and the back of the chassis.

- With fanless, low-rise CPU heat sinks and low-RPM "quiet" fans, the chassis should have a noise signature that is acceptable in a modern office environment (around 45dB).
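The geometry in the goals above is easy to check with a few lines of code. This is a minimal sketch: one rack unit is 1.75", which is standard, but the usable width left for blades inside a 19" chassis (`USABLE_WIDTH_IN`, set here to 16") is purely an assumed figure for illustration.

```python
RACK_UNIT_IN = 1.75  # one rack unit (1U) is 1.75 inches high


def rack_units(height_in):
    """Convert a chassis height in inches to rack units."""
    return height_in / RACK_UNIT_IN


def blades_that_fit(usable_width_in, blade_thickness_in):
    """Number of blades of a given thickness that fit side by side."""
    return int(usable_width_in // blade_thickness_in)


if __name__ == "__main__":
    print(f'14" chassis height = {rack_units(14):g}U')
    USABLE_WIDTH_IN = 16.0  # ASSUMPTION: width available for blades
    for thickness in (1.0, 2.0):
        count = blades_that_fit(USABLE_WIDTH_IN, thickness)
        print(f'{thickness:g}" blades: {count} fit in {USABLE_WIDTH_IN:g}"')
```

Under that assumed width, 1" blades give the 16-blade density mentioned above, and falling back to 2" blades halves it.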

Software components

- Linux - For both HPCC and Virtualization utilizations/implementations. (RHEL, CentOS, Fedora, Scientific Linux, Debian)

- Apache, MySQL, PostgreSQL, PHP, for various web hosting needs

- Cobbler for node provisioning

- PDSH, XCAT

- Virtualization Software: XEN or KVM

- HPCC software: PVM, MPICH, MPICH2, LAM/MPI, OpenMPI, ATLAS, GotoBLAS, Torque/OpenPBS

- OpenFiler as the central storage server

- DRBD, HeartBeat, ipvs, ldirectord, etc for various high availability requirements.

- Monitoring tools - MRTG, Ganglia, Nagios, ZenOSS

Project benefits/utilization

- Ideal for both HPCC and Virtualization setups.

- Small IT shops who want to run their own web/db/cache/firewalls and don't want to waste a lot of money, power and space for it.

- Ideal for training institutes and demonstration units.

- Low power consumption when idle.

- Efficient space usage.

- Replaceable parts, fully serviceable.

- Fewer cables.

- Plug and play. i.e. Just connect the enclosure to power and network, and that is it.

- Expandable as per your budget/pocket. (Increased number of nodes)

- Upgrade nodes which need an upgrade. (Increase RAM from 1GB to 2GB, or from 2 GB to 4 GB. Upgrade from Core2Duo to Core2Quad, etc).

The basic designs of the chassis and the blade itself are reproduced below. I drew them by hand, so pardon any ink smears. I hope I can ask someone to draw them for me in a CAD system.

Diagrams (hand drawn)

The one below is a pencil drawing and is not very clear. In this design, I have rotated the motherboard 180 degrees and brought the I/O panel to the front. This is a much more efficient design in my opinion. It will in fact save a lot of in-box KVM wiring, and it will also save me the trouble of bringing any ports manually to the front panel. With this design, I just have to bring a power-status LED and a power switch to the front.

Components

These are the components which I intend to use. The actual components used may vary depending on availability and further study / analysis of the blade design.

Power Supply Units (PSU) for the main enclosure

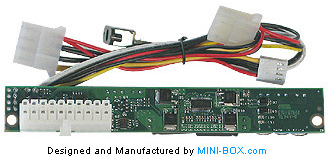

Power Supply Units (PSU) for the motherboards

I am interested in the last two in the list below:

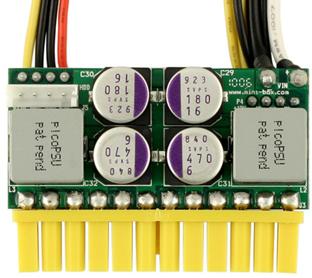

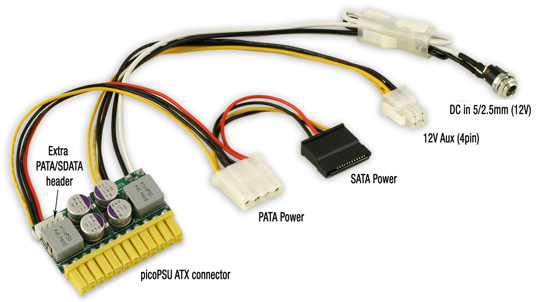

- http://www.mini-box.com/s.nl/it.A/id.417/.f?sc=8&category=13

- http://www.mini-box.com/picoPSU-160-XT

- http://www.itx-warehouse.co.uk/Product.aspx?ProductID=754

- http://www.alibaba.com/product-gs/263842405/200W_12V_24pin_MINI_ITX_Pico.html

- http://www.mini-box.com/PW-200M-DC-DC-power-supply

- http://www.mini-box.com/s.nl/it.A/id.301/.f

Processors

I am mainly interested in Core2Duo and Core2Quad processors from Intel, and Athlon 64 X2 and Phenom/Phenom II from AMD.

Note: TDP = Thermal Design Power [[2]]

For Intel, the following URL was helpful: http://ark.intel.com/ProductCollection.aspx?familyId=26548

- Core 2 Duo Processors (35W TDP) [2Cores/2Threads] Core2Duo T6670 - T9900

- T9900 (6M Cache, 3.06 GHz, 1066 MHz FSB)

- T9800 (6M Cache, 2.93 GHz, 1066 MHz FSB)

- T9600 (6M Cache, 2.80 GHz, 1066 MHz FSB)

- T9550 (6M Cache, 2.66 GHz, 1066 MHz FSB)

- T9500 (6M Cache, 2.60 GHz, 800 MHz FSB)

- T9400 (6M Cache, 2.53 GHz, 1066 MHz FSB)

- T9300 (6M Cache, 2.50 GHz, 800 MHz FSB)

- T8300 (3M Cache, 2.40 GHz, 800 MHz FSB)

- T8100 (3M Cache, 2.10 GHz, 800 MHz FSB)

- T7800 (4M Cache, 2.60 GHz, 800 MHz FSB)

- T7700 (4M Cache, 2.40 GHz, 800 MHz FSB)

- T7600 (4M Cache, 2.33 GHz, 667 MHz FSB)

- T7500 (4M Cache, 2.20 GHz, 800 MHz FSB)

- T7400 (4M Cache, 2.16 GHz, 667 MHz FSB)

- T7300 (4M Cache, 2.00 GHz, 800 MHz FSB)

- T7250 (2M Cache, 2.00 GHz, 800 MHz FSB)

- T7200 (4M Cache, 2.00 GHz, 667 MHz FSB)

- T7100 (2M Cache, 1.80 GHz, 800 MHz FSB)

- T6670 (2M Cache, 2.20 GHz, 800 MHz FSB)

Note: Core2 Duo T6600 and below do not support Virtualization Technology.
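Once a node is running Linux, whether its CPU reports hardware virtualization support can be read from the `flags` line of `/proc/cpuinfo`: `vmx` indicates Intel VT-x and `svm` indicates AMD-V. A minimal sketch (the function name is just illustrative):

```python
import os


def hw_virt_flags(cpuinfo_text):
    """Return the hardware virtualization flags found in /proc/cpuinfo text.

    'vmx' indicates Intel VT-x, 'svm' indicates AMD-V.
    """
    found = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            found.update(f for f in flags if f in ("vmx", "svm"))
    return found


if __name__ == "__main__":
    path = "/proc/cpuinfo"
    if os.path.exists(path):
        with open(path) as fh:
            flags = hw_virt_flags(fh.read())
        print("hardware virtualization:",
              ", ".join(sorted(flags)) or "not reported")
    else:
        print(path, "not found (not a Linux system?)")
```

The flag only says that the CPU supports the feature; it may still have to be enabled in the BIOS before Xen (HVM) or KVM can use it.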

- Core 2 Quad (Desktop) Processors (65W TDP) [4Cores/4Threads] [These processors are not available in 35W TDP]

- Q9550S (12M Cache, 2.83 GHz, 1333 MHz FSB)

- Q9505S (6M Cache, 2.83 GHz, 1333 MHz FSB)

- Q9400S (6M Cache, 2.66 GHz, 1333 MHz FSB)

- Q8400S (4M Cache, 2.66 GHz, 1333 MHz FSB)

- Q8200S (4M Cache, 2.33 GHz, 1333 MHz FSB)
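To compare the 35W dual-core parts with the 65W quad-core parts, a crude per-watt figure can be computed from the numbers in the lists above. This is only a sketch: "cores x GHz per watt of TDP" ignores cache size, FSB speed and real workloads, and TDP is not the same as measured power draw, so treat the result as a rough guide at best.

```python
# (model, cores, clock in GHz, TDP in watts), taken from the lists above
CPUS = [
    ("T9900", 2, 3.06, 35),
    ("T7100", 2, 1.80, 35),
    ("Q9550S", 4, 2.83, 65),
    ("Q8200S", 4, 2.33, 65),
]


def tdp_per_core(cores, tdp_w):
    """Watts of TDP available per core."""
    return tdp_w / cores


def ghz_per_watt(cores, clock_ghz, tdp_w):
    """Aggregate clock (cores x GHz) per watt of TDP: a crude proxy only."""
    return cores * clock_ghz / tdp_w


if __name__ == "__main__":
    for model, cores, clock, tdp in CPUS:
        print(f"{model:7s} {tdp_per_core(cores, tdp):6.2f} W/core "
              f"{ghz_per_watt(cores, clock, tdp):.3f} GHz/W")
```

By this measure the fastest parts of both families come out very close to each other, so the choice between them depends more on how many cores are wanted per blade than on efficiency.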

Note: Intel has recently launched Core i3 and i5 processors. These are the successors to the Core 2 line, built on a newer microarchitecture rather than being a re-branding of Core2Duo. However, their price is too high at the moment and thus I am not considering them for this project.

Read this, if you think that Intel Atom processors can be used in Data-Centers [[3]].

Processor heat-sink / fan / cpu-cooler

This is probably the most important component in this entire setup. I want to use a CPU cooling solution which can keep the total thickness of the blade within 1.75". This means that I need larger, low-rise CPU heat sinks with very thin CPU fans. I would prefer not to use CPU fans at all, but I may use them if the processor cannot handle the heat with a heat sink alone. In my opinion, the Core2Duo may handle its heat without a fan, provided there is enough air flow from the front of the chassis. Core2Quad and AMD CPUs must use fan-based cooling solutions.

I have been unable to find my dream cooling solution so far in the local market here in Saudi Arabia, and I am sure that I will never find it here. [For obvious reasons].

Here is what I found! The low-profile CPU coolers:

| Cooler Name | Supported Processor | Socket | Heatsink Dimensions | Fan Dimensions | Total Height | Noise | Price | URL |
|---|---|---|---|---|---|---|---|---|
| Cooler Master 1U CPU Cooler (E1N-7CCCS-06-GP) | Pentium 4, Pentium D, Core 2 Duo, Core 2 Quad | LGA 775 | 93 x 93 x 27.5 mm | 70 x 70 x 13 mm | 40.5 mm | 40 dBA | $40.99 | [[4]] |
| Silverstone (SST-NT07-775) Slim Profile CPU Cooler | Core 2 Duo E8000/E7000 series, Pentium Dual-Core E5000 series, Celeron D 400, Celeron E1000 series | LGA 775 | With fan: 93 (W) x 36.5 (H) x 93 (D) mm | (included) | 37 mm | 15~23 dBA | $19.99 | [[5]] |
| Thermolab Nano Silencer TLI-U | | LGA 775 | With fan: 45 x 85 x 96 mm | (included) | 45 mm | 48.3 dB | $32 | [[6]] |
| GELID Solutions Slim Silence 775 1U Low Profile Heatpipe Cooler | Dual-Core and Core2Duo with TDP up to 65W with good case ventilation | LGA 775 | With fan: 95 (L) x 93.5 (W) x 28 (H) mm | (included) | 28 mm | 15 - 24.5 dBA | $21.95 | [[7]] |
| CPU Cooler Slim Silence AM2 | AMD 2650e, 3250e, 4850e, 5050e and others with low power consumption up to 65W (TDP) with good case ventilation | AM2, AM2+, AM3 | With fan: 105 (L) x 74.5 (W) x 28 (H) mm | (included) | 28 mm | 15 - 27.2 dBA | $21 | [[8]] |
| Akasa AK-CCE-7106HP | Core 2 Duo, Core i3 and Core i5 (73W) CPUs | LGA 775, LGA 1156 | With fan: 87.2 x 85.2 x 29.5 mm | (included) | 30 mm | 18.9 - 31.9 dB(A) | $15 | [[9]] |
| Akasa AK-CCE-7107BS | LGA 775: Core2Duo, Core2Quad & Xeon; LGA 1156: Core i3, Core i5, Core i7 & Xeon | LGA 775, LGA 1156 | 87 x 91 x 30.5 (H) mm | 75 x 75 x 15 mm | 45.5 mm | 41.65 dB(A) | ? | [[10]] |
| Akasa AK-CC029-5 | Core 2 Duo, Core 2 Quad & LGA 775 Xeon | LGA 775 | 87 x 91 x 30.5 (H) mm | 75 x 75 x 15 mm | 45.5 mm | 41.65 dB(A) | $25 | [[11]] |
| Akasa AK-CC080CU | Core 2 Duo processors | LGA 775 | With fan: 87.2 x 85.2 x 29.5 mm | (included) | 30 mm | 18.9 - 31.9 dB(A) | $15 | [[12]] |
| Akasa AK-CC080AL | Core 2 Duo processors | LGA 775 | With fan: 87.2 x 85.2 x 29.5 mm | (included) | 30 mm | 18.9 - 31.9 dB(A) | $15 | [[13]] |
| Akasa AK-CC044T | Core 2 Duo processors | LGA 775 | 95 x 95 x 35 mm | 70 x 70 x 15 mm | 50 mm | 29.1 - 46.5 dB(A) | $15 | [[14]] |
| Akasa AK-CC044B | Core 2 Duo processors | LGA 775 | 95 x 95 x 30.9 mm | 70 x 70 x 10 mm | 40.9 mm | 23.38 dB(A) | $15 | [[15]] |
| Fanless: | | | | | | | | |
| Cooljag OAK-B5 1U Server Fanless CPU Cooler | ? | LGA 775 | 92 (L) x 92 (W) x 27 (H) mm | N/A | 27 mm | N/A | $37.95 | [[16]] |
| Akasa AK-395-2 | Intel LGA 775 P4, Core2Duo, Core2Quad & Xeon | LGA 775 | 84 x 84 x 27 (H) mm | N/A | 27 mm | N/A | $23 | [[17]] |
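The total heights in the table above can be checked against the blade thickness target with a short script. This is a sketch only: the heights are copied from the table, while `stack_allowance_mm` (the space taken by the tray, standoffs, board and CPU package underneath the cooler) is a hypothetical figure that would have to be measured on a real blade.

```python
MM_PER_INCH = 25.4

# (name, total height in mm, has fan), copied from the table above
COOLERS = [
    ("Cooler Master E1N-7CCCS-06-GP", 40.5, True),
    ("Silverstone SST-NT07-775", 37.0, True),
    ("Thermolab Nano Silencer TLI-U", 45.0, True),
    ("GELID Slim Silence 775", 28.0, True),
    ("Slim Silence AM2", 28.0, True),
    ("Akasa AK-CCE-7106HP", 30.0, True),
    ("Akasa AK-CCE-7107BS", 45.5, True),
    ("Akasa AK-CC029-5", 45.5, True),
    ("Akasa AK-CC080CU", 30.0, True),
    ("Akasa AK-CC080AL", 30.0, True),
    ("Akasa AK-CC044T", 50.0, True),
    ("Akasa AK-CC044B", 40.9, True),
    ("Cooljag OAK-B5", 27.0, False),
    ("Akasa AK-395-2", 27.0, False),
]


def coolers_that_fit(blade_thickness_in, stack_allowance_mm):
    """Return the coolers whose total height fits inside the blade.

    blade_thickness_in -- target blade thickness in inches
    stack_allowance_mm -- ASSUMED height used up below the cooler
    """
    budget_mm = blade_thickness_in * MM_PER_INCH - stack_allowance_mm
    return [c for c in COOLERS if c[1] <= budget_mm]


if __name__ == "__main__":
    ALLOWANCE_MM = 12.0  # hypothetical; measure on a real tray and board
    for name, height, has_fan in coolers_that_fit(1.75, ALLOWANCE_MM):
        kind = "fan" if has_fan else "fanless"
        print(f"{name}: {height} mm ({kind})")
```

Even with no allowance at all, none of the listed coolers fits a 1" (25.4 mm) blade, since the lowest ones are 27 mm high.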

Motherboards

I am interested primarily in Micro-ATX motherboards. The other boards which I am willing to consider are ATX. I would probably not touch/discuss Mini-ITX, mainly because of its unavailability in the parts of the world where I want people to use the Data Center In a Box concept in the first place.

Mini-ITX has the following major disadvantages:

- They are not commonly available in all parts of the world.

- They (normally) come with their own type of processor already installed. These are also difficult to get in all parts of the world. Some motherboards do support Core2Duo processors too, but that is not common.

- Their processors (generally) have low compute power, and thus most of the time are not suitable for running virtualization workloads, though some of them do support VT-enabled CPUs. Interestingly, people have used them for compute-intensive jobs too, such as [[18]], [[19]], and very useful scientific projects, such as [[20]].

- The amount of RAM that can be installed on these boards is also limited; only in rare cases can it go as high as 4GB.

In my opinion, if you are not using virtualization, then using Mini-ITX can in fact be advantageous over other motherboards. They consume very little power and dissipate very little heat. The following are some good uses for Mini-ITX, in my opinion:

- As a low cost, low power Beowulf/HPCC cluster. As seen here: http://mini-itx.com/23333525

- As a low cost, (yet efficient) web server farm

- A Render farm

- A (MySQL, PostgreSQL) DB cluster

- Creating your own custom routers and firewall devices. (In fact, I am thinking of using one in my Data Center In a Box project to set up the firewall/router, if I can find one, that is!)

- Creating your own SAN/NAS solutions, using JBOD (Just a Bunch Of Disks) and FOSS software, e.g. Openfiler. (In fact, I believe this is going to be my next project.)

- and a lot of other crazy stuff ....

With that said, let's put Mini-ITX aside for now, because my goal is to have a data center which runs virtual machines for the workload.

I have found the following very helpful in finding the dimensions of the motherboards, the locations of the mounting holes, etc.

- From http://www.formfactors.org/, I got ATX specification (rev 2.2) [[21]] and Micro-ATX specification (rev 1.2) [[22]]

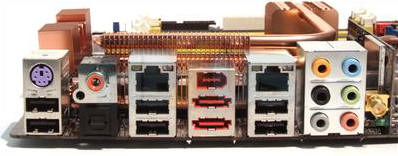

- I also found out that the back panel, a.k.a. the I/O panel plate, is 6.5" x 1.75".

Similar projects by others

- http://limulus.basement-supercomputing.com/ [[23]]

- FAWN (A Fast Array of Wimpy Nodes) [[24]]

- Mini Cluster from http://mini-itx.com [[25]]

- http://mini-itx.com/23333525 and http://ainkaboot.co.uk/octimod.php

- http://www.linuxfordevices.com/c/a/News/64way-miniITX-cluster-targets-classrooms-offices/

- https://oxynux.org/wiki/projects/cluster/start