Libvirt overwrites the existing iptables rules

From WBITT's Cooker!

m (→Objective / goal of this document) |

(Redirected page to XEN, KVM, Libvirt and IPTables) |


| (72 intermediate revisions not shown) | |||

| Line 1: | Line 1: | ||

| - | + | #REDIRECT [[XEN, KVM, Libvirt and IPTables]] | |

| - | + | ||

<b> DISCLAIMER: This document may contain text, which is horribly wrong. This page is just my scratch pad for my personal notes, on this beast. So until I get it right, you are advised not to read anything in this document. It may shatter your existing iptables/virtualization concepts. READ AND/OR USE AT YOUR OWN RISK.</b> | <b> DISCLAIMER: This document may contain text, which is horribly wrong. This page is just my scratch pad for my personal notes, on this beast. So until I get it right, you are advised not to read anything in this document. It may shatter your existing iptables/virtualization concepts. READ AND/OR USE AT YOUR OWN RISK.</b> | ||

| Line 27: | Line 26: | ||

* Routed Network | * Routed Network | ||

| - | In this paper, we would focus on NAT based virtual networks (virbr0). We would also be using the terms XEN and KVM interchangeably, because we are using CENTOS to analyse the behaviour of virtual networks; and both XEN and KVM in our case, are available by default, on our CENTOS platform. On CENTOS, they both use the same virtualization API (libvirt) to manage the VMs, even though the underlying technology of both are different. Thus, the behaviour of the VMs, or the "feel" of the VMs, (so to speak), is same on both XEN and KVM. XEN is | + | In this paper, we would focus on NAT based virtual networks (virbr0). We would also be using the terms XEN and KVM interchangeably, because we are using CENTOS to analyse the behaviour of virtual networks; and both XEN and KVM in our case, are available by default, on our CENTOS platform. On CENTOS, they both use the same virtualization API (libvirt) to manage the VMs, even though the underlying technology of both are different. Thus, the behaviour of the VMs, or the "feel" of the VMs, (so to speak), is same on both XEN and KVM. XEN is para-virtualization technology, and KVM is hardware-assisted virtualization technology. |

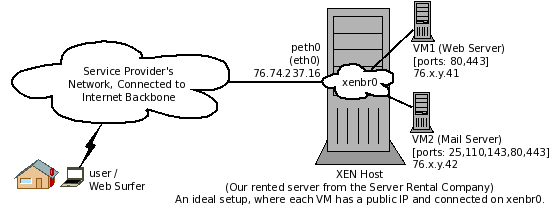

For service hosting purposes, it is best to use the shared device model. In this model, all VMs, share the same physical network card of the host, as well as the IP scheme, to which, the physical network card of the physical host is connected to. This would mean, of-course to have more available IPs of the same network scheme, so they can be assigned to the VMs. In this way, all VMs appear on the public network in the same way, as if they were just another physical server. This is the easiest way to connect your VMs to your infrastructure, and make them accessible. (We have labelled it as Rich-Man's setup, in the solutions section below). | For service hosting purposes, it is best to use the shared device model. In this model, all VMs, share the same physical network card of the host, as well as the IP scheme, to which, the physical network card of the physical host is connected to. This would mean, of-course to have more available IPs of the same network scheme, so they can be assigned to the VMs. In this way, all VMs appear on the public network in the same way, as if they were just another physical server. This is the easiest way to connect your VMs to your infrastructure, and make them accessible. (We have labelled it as Rich-Man's setup, in the solutions section below). | ||

| Line 44: | Line 43: | ||

===The Problem=== | ===The Problem=== | ||

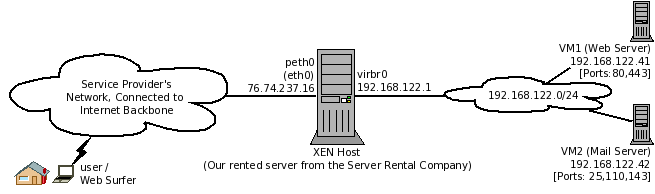

| - | The problem is faced, when a virtual machine (on the private network) needs to be accessed from the outside-network, instead of a virtual machine accessing the outside-network. <i>[Note the difference, here]</i>. The virtual machine in discussion is on a NATed, (non- | + | The problem is faced, when a virtual machine (on the private network) needs to be accessed from the outside-network, instead of a virtual machine accessing the outside-network. <i>[Note the difference, here]</i>. The virtual machine in discussion is on a NATed, (non-route-able) <b>private network</b>, inside a XEN or KVM host, <b>connected to virbr0 interface of the physical host</b>. Naturally, the VMs are hidden behind the physical host they are hosted on. And the only way to access such VMs is to go through the public interface/IP of the physical host. This is becoming a common case, as more and more people have started utilizing this technology on their already existing infrastructure. |

Consider a physical web server, located in any of the data centers, of a server rental company, connected directly to the internet, with a public IP. The administrator of the server has noticed that the server hardware resources are hardly being used by the web service. Most of the resources, mainly CPU is free 90-95% of time. The administrator of the server wants to setup small virtual machines, inside this physical server. The new VMs can take any role, such as, but not limited to: web server, mail server, database server, monitoring server, or even a firewall. At the same time, the server administrator either does not want to, or cannot afford to, pay for additional public IPs. In such a case, the administrator has no choice but to setup the VMs on a private network on the physical host itself, and use some traffic forwarding mechanism to redirect the traffic he is interested in, from the physical network card of his server, to each virtual machine. | Consider a physical web server, located in any of the data centers, of a server rental company, connected directly to the internet, with a public IP. The administrator of the server has noticed that the server hardware resources are hardly being used by the web service. Most of the resources, mainly CPU is free 90-95% of time. The administrator of the server wants to setup small virtual machines, inside this physical server. The new VMs can take any role, such as, but not limited to: web server, mail server, database server, monitoring server, or even a firewall. At the same time, the server administrator either does not want to, or cannot afford to, pay for additional public IPs. In such a case, the administrator has no choice but to setup the VMs on a private network on the physical host itself, and use some traffic forwarding mechanism to redirect the traffic he is interested in, from the physical network card of his server, to each virtual machine. | ||

| Line 65: | Line 64: | ||

The objective of this document is to identify/clarify the following: | The objective of this document is to identify/clarify the following: | ||

| - | * What are these specific | + | * What are these specific iptables rules? |

* Why do we care? and, When should we care? | * Why do we care? and, When should we care? | ||

* Does it matter if we lose these rules? | * Does it matter if we lose these rules? | ||

| Line 73: | Line 72: | ||

===The test setup=== | ===The test setup=== | ||

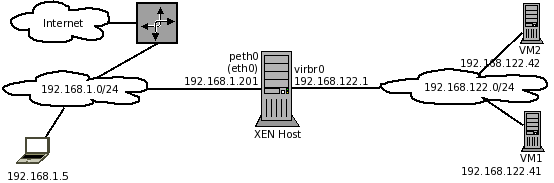

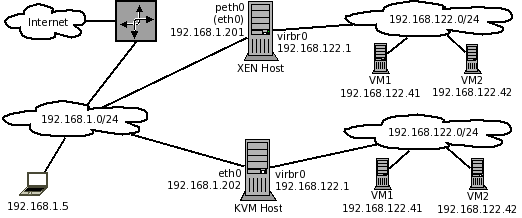

| - | In our test setup, we have two Dell Optiplex PCs, and a laptop to access them, and their VMs | + | In our test setup, we have two Dell Optiplex PCs, and a laptop to access them, and their VMs. All hardware is connected to a switched network. The network is also connected to internet, through DSL. Both Dell PCs have CENTOS 5.5 x86_64, installed on them, and were updated using the "yum update" command. One of them is XEN host, and the other is KVM host. |

* XENhost (192.168.1.201) [CENTOS 5.5 64bit] | * XENhost (192.168.1.201) [CENTOS 5.5 64bit] | ||

* KVMhost (192.168.1.202) [CENTOS 5.5 64bit] | * KVMhost (192.168.1.202) [CENTOS 5.5 64bit] | ||

| - | * Laptop (192.168.1.5) [Fedora 14. OS on the client machine is irrelevant to the discussion] | + | * Laptop (192.168.1.5) [Fedora 14. OS on the client machine is irrelevant to the discussion.] |

| - | Note: Though the IPs from 192.168.1.0/24 network are actually non- | + | Note: Though the IPs from 192.168.1.0/24 network are actually non-route-able private-IPs, still, for the sake of our example/test setup, we will use the term "Public IP" for them, most of the time, in the text below. The IPs from 192.168.122.0/24 network will be considered private. |

The diagram below, shows the setup described above. | The diagram below, shows the setup described above. | ||

| Line 102: | Line 101: | ||

* libvirt-0.6.3-33.el5_5.3.x86_64 | * libvirt-0.6.3-33.el5_5.3.x86_64 | ||

* libvirt-python-0.6.3-33.el5_5.3.x86_64 | * libvirt-python-0.6.3-33.el5_5.3.x86_64 | ||

| - | * iptables-1.3.5-5.3.el5_4.1.x86_64.rpm <i>[Remained | + | * iptables-1.3.5-5.3.el5_4.1.x86_64.rpm <i>[Remained unchanged]</i> |

===Problem analysis=== | ===Problem analysis=== | ||

| - | ====Example/simple firewall/iptables rules on our XEN server==== | + | ====Example/simple firewall/iptables rules on our XEN/KVM server==== |

| - | To analyse the problem, first we have created a very simple iptables | + | To analyse the problem, first we have created a very simple iptables rule-set. The LOG rules are added to all chains of nat and filter tables. i.e. INPUT, FORWARD and OUTPUT chains of the filter table, and PREROUTING, POSTROUTING and OUTPUT chains of nat table. These rules do nothing, except logging any traffic that passes through that particular chain. There is no advantage of doing so (logging the traffic) in our scenario. They act as a kind of markers. If later on, these rules disappear, or get changed, it will prove our point, that something is indeed messing with them; and we need to find the culprit. |

Note: There is an OUTPUT chain in filter table, and there is an OUTPUT chain in the nat table. They are not the same. They are two different chains. | Note: There is an OUTPUT chain in filter table, and there is an OUTPUT chain in the nat table. They are not the same. They are two different chains. | ||

| - | Here is our | + | Here is our rule-set. Notice that we also have a rule to block any incoming SMTP request to this server, solely for the sake of example. |

<pre> | <pre> | ||

[root@xenhost ~]# iptables -L | [root@xenhost ~]# iptables -L | ||

| Line 167: | Line 166: | ||

</pre> | </pre> | ||

| - | It is important to note, that | + | It is important to note, that a server, which is placed-on / connected-to Internet, normally controls only/mainly the incoming traffic. An internet web server, for example, is normally protected by a host-firewall, such as iptables rules, configured on the server itself, to restrict/control traffic arriving on its INPUT chain. It is important to note that since the server administrator "trusts" the server itself, it does not have to control the outgoing traffic, which is the OUTPUT chain. Thus no rules are configured on the OUTPUT chain. It is also important to note, that since the server is the end-point of any two way internet communication, there is never a need to configure any rules on the FORWARD chain, nor the PREROUTING, POSTROUTING and OUTPUT chains in the nat table. |

| - | In case of a XEN or KVM host, we would be having one or more virtual machines, behind the said server. The traffic to/from the VMs will have to pass through the FORWARD chain on the physical host. Thus FORWARD chain has significant importance in this case, and needs to be both protected against abuse | + | In case of a XEN or KVM host, we would be having one or more virtual machines, behind the said server. The traffic to/from the VMs, to/from and outside-server/Internet, will have to pass through the FORWARD chain on the physical host. Thus FORWARD chain has significant importance in this case, and needs to be both protected against abuse; and, at the same time, facilitate traffic between VMs and the physical host (and the outside world). Since the VMs in our particular case are on a private network, inside the physical host, we would certainly need PREROUTING rules to redirect (DNAT) any traffic towards the VMs, to facilitate any traffic coming from the Internet. Such as traffic coming in on port 80 on the public IP of this physical host, may need to be forwarded to VM1, which can be a virtualized web server. Also SMTP, POP and IMAP may need to be forwarded to VM2, which can be a virtualized mail server. |

The POSTROUTING chain also has a significant role in our case, because any traffic coming out of the VMs, going towards the internet, will need to be translated to the IP of the public interface of the physical host. We normally need SNAT or MASQUERADE rules here. | The POSTROUTING chain also has a significant role in our case, because any traffic coming out of the VMs, going towards the internet, will need to be translated to the IP of the public interface of the physical host. We normally need SNAT or MASQUERADE rules here. | ||
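As a rough illustration of the PREROUTING (DNAT) and POSTROUTING (MASQUERADE) roles described above, the following shell sketch only prints a nat-table fragment in iptables-save syntax; it does not load anything into the kernel. The VM addresses 192.168.122.11 (VM1, web) and 192.168.122.12 (VM2, mail), the public interface name eth0, and the function names are assumptions made for this example, not values taken from the test setup.

```shell
#!/bin/sh
# Sketch only: prints nat rules in iptables-save syntax; nothing is loaded.
# Assumed (hypothetical) values: eth0 is the public interface,
# VM1 = 192.168.122.11 (web server), VM2 = 192.168.122.12 (mail server).

PUBLIC_IF="eth0"
VM_NET="192.168.122.0/255.255.255.0"

# dnat_rule PORT VM_IP
# Prints one PREROUTING rule that redirects a TCP port to the same port on a VM.
dnat_rule() {
    printf '%s\n' "-A PREROUTING -i $PUBLIC_IF -p tcp -m tcp --dport $1 -j DNAT --to-destination $2:$1"
}

# Prints the whole fragment: DNAT rules for incoming traffic,
# and one MASQUERADE rule for traffic leaving the VM network.
print_nat_fragment() {
    printf '%s\n' '*nat'
    dnat_rule 80  192.168.122.11   # HTTP -> VM1
    dnat_rule 25  192.168.122.12   # SMTP -> VM2
    dnat_rule 110 192.168.122.12   # POP3 -> VM2
    dnat_rule 143 192.168.122.12   # IMAP -> VM2
    printf '%s\n' "-A POSTROUTING -s $VM_NET -d ! $VM_NET -j MASQUERADE"
    printf '%s\n' 'COMMIT'
}

print_nat_fragment
```

Note that DNAT only rewrites the destination address of a packet. The rewritten packet must still be accepted by the FORWARD chain of the physical host before it can reach the VM.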

| - | + | You should always follow the principle of not hosting any un-necessary services on the physical host itself, such as SMTP, HTTP, etc. However, there would still be a need to protect this physical host from any malicious traffic and attacks directly targeted at it, such as various forms of ICMP attacks. | |

| - | Note, that we do not intend to protect the virtual machines hosted on this server, using the iptables rules on the physical host. That is, (a) very bad practice, as it complicates the firewall rules on the physical host, for each time a VM is added/removed/started/shutdown, (b) it over-loads the server to perform in the firewall role, in addition to managing the VMs inside it. The best practice is to let the physical host do only the VM management | + | Note, that we do not intend to protect the virtual machines hosted on this server, using the iptables rules on the physical host. That is, (a) very bad practice, as it complicates the firewall rules on the physical host, for each time a VM is added/removed/started/shutdown, (b) it over-loads the host server to perform in the firewall role, in addition to managing the VMs inside it. The best practice is to let the physical host do only the VM management; and protect the virtual machines using the host firewalls on the VMs themselves. If resources on the physical host permit, then an additional VM can be created working solely as a firewall for all the VMs. However, this is beyond the scope of this paper. |

====The default iptables rules on a XEN physical host==== | ====The default iptables rules on a XEN physical host==== | ||

| - | + | Now, we discuss the default iptables rules found on a XEN host. For the time being, for the sake of ease of understanding, we have stopped the iptables service on this host. This will help us observe, what iptables rules are set up, when a XEN or KVM host boots up. | |

| - | For the time being, for the sake of ease of understanding, we have stopped the iptables service. This will help us observe, what iptables rules are set up, when a XEN or KVM host boots up. | + | |

Below are the iptables rules found on a XEN host. Note that there is no VM running on the host at this time. | Below are the iptables rules found on a XEN host. Note that there is no VM running on the host at this time. | ||

| Line 328: | Line 326: | ||

It is important to note, that in the two examples above, iptables service was disabled only to avoid possible confusion, that the iptables rules shown may have been coming from the iptables service. Otherwise, we do not recommend that you disable your iptables service. If the default iptables rules setup by the iptables service are not suitable for your particular case, then you can adjust them as per your needs. The bottom line is, that you must have some level of protection against unwanted traffic. | It is important to note, that in the two examples above, iptables service was disabled only to avoid possible confusion, that the iptables rules shown may have been coming from the iptables service. Otherwise, we do not recommend that you disable your iptables service. If the default iptables rules setup by the iptables service are not suitable for your particular case, then you can adjust them as per your needs. The bottom line is, that you must have some level of protection against unwanted traffic. | ||

| - | Another point to be noted here is that, ideally, on a physical host, you should not be running any publicly accessible service (a.k.a. public serving service) other than ssh | + | Another point to be noted here is that, ideally, on a physical host, in a production environment, you should not be running any publicly accessible service (a.k.a. public serving service) other than ssh. It means that you should not use your physical host to serve out web pages/websites, or run mail/FTP services, etc. You do not want a cracker to exploit a vulnerability in any of the extra services on your physical host, gain root access, and in turn, gain access to <b>"all"</b> virtual machines hosted on this physical host. Even SSH should be used with key based authentication only, further restricted to be accessed only from the IP addresses, of the locations you manage your physical hosts from. If possible, you should also consider running your physical host and the VMs, on top of SELinux. |

====Understanding the rules file created by iptables-save command==== | ====Understanding the rules file created by iptables-save command==== | ||

| - | + | Many people consider the output of iptables-save to be very cryptic. <b>Actually, it is not that cryptic at all!</b> Here is a brief explanation of the iptables rules file, created by iptables-save command. We will use the default iptables rules created by the libvirtd service, saved in a file created at our XEN host. Please note, that the iptables rules on both XEN and KVM machines are found to be almost identical to each other. The only difference you will notice, is an extra "--physdev-in" rule on the XEN host. | |

| - | Many people consider the output of iptables-save to be very cryptic. <b>Actually, it is not that cryptic at all!</b> Here is a brief explanation of the iptables rules file, created by iptables-save command. We will use the default iptables rules created by the libvirtd service, saved in a file created at our XEN host. | + | |

<b>Note 1:</b> A small virtual machine was created inside XEN host and was started before executing the commands shown below. | <b>Note 1:</b> A small virtual machine was created inside XEN host and was started before executing the commands shown below. | ||

| Line 368: | Line 365: | ||

* Lines starting with : (colon) are the name of chains. These lines contain following four pieces of information about a chain. ":ChainName POLICY [Packets:Bytes]" | * Lines starting with : (colon) are the name of chains. These lines contain following four pieces of information about a chain. ":ChainName POLICY [Packets:Bytes]" | ||

** The name of the chain right next to the starting colon. e.g. "INPUT" , or "PREROUTING" | ** The name of the chain right next to the starting colon. e.g. "INPUT" , or "PREROUTING" | ||

| - | ** The default policy of the chain. e.g. ACCEPT or DROP | + | ** The default policy of the chain. e.g. ACCEPT or DROP. 99% of time, you will see ACCEPT here. (Note: REJECT cannot be used as a default policy of any chain.) |

** The two values inside the square brackets are the number of packets passed through this chain, so far, and the total number of bytes in those packets. e.g. [364:41916] means 364 packets, totalling 41916 bytes, have passed through this chain till this point in time. | ** The two values inside the square brackets are the number of packets passed through this chain, so far, and the total number of bytes in those packets. e.g. [364:41916] means 364 packets, totalling 41916 bytes, have passed through this chain till this point in time. | ||

* The lines starting with a - (hyphen/minus sign) are the actual rules, which you put in here. "-A" would mean "Append" the rule (add it at the end of the chain). "-I" (eye) would mean "Insert" the rule (by default, at the top of the chain). | * The lines starting with a - (hyphen/minus sign) are the actual rules, which you put in here. "-A" would mean "Append" the rule (add it at the end of the chain). "-I" (eye) would mean "Insert" the rule (by default, at the top of the chain). | ||

| Line 374: | Line 371: | ||

As you notice, these rules are no different than the standard rules you type on the command line, or in any shell script. The limitation of this style of writing rules (as shown here) is, that you cannot use loops and conditions, as you would normally do in a shell script. | As you notice, these rules are no different than the standard rules you type on the command line, or in any shell script. The limitation of this style of writing rules (as shown here) is, that you cannot use loops and conditions, as you would normally do in a shell script. | ||
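To make the ":ChainName POLICY [Packets:Bytes]" layout described above concrete, here is a small shell sketch that splits one chain line into its four fields. The function name parse_chain_line is made up for this example, and the sample line reuses the [364:41916] counters mentioned above.

```shell
#!/bin/sh
# Sketch: split one chain line from an iptables-save dump into its fields.
# The function name parse_chain_line is hypothetical (made up for this example).

parse_chain_line() {
    line=$1
    case $line in
        :*) ;;                                    # chain lines start with a colon
        *)  echo "not a chain line" >&2; return 1 ;;
    esac
    line=${line#:}                # drop the leading colon
    chain=${line%% *}             # first word: chain name
    rest=${line#* }               # everything after the first space
    policy=${rest%% *}            # second word: default policy
    counters=${rest#*\[}          # text after the opening bracket
    counters=${counters%\]*}      # text before the closing bracket
    packets=${counters%%:*}       # left of the colon
    bytes=${counters#*:}          # right of the colon
    echo "chain=$chain policy=$policy packets=$packets bytes=$bytes"
}

parse_chain_line ':INPUT ACCEPT [364:41916]'
# prints: chain=INPUT policy=ACCEPT packets=364 bytes=41916
```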

| - | ====More details on | + | ====More details on the rules file created by iptables-save command==== |

For this explanation, the following ASCII version of Figure 3, should be helpful. | For this explanation, the following ASCII version of Figure 3, should be helpful. | ||

| Line 415: | Line 412: | ||

* Line 05 defines a rule in the POSTROUTING chain in the "nat" table. It says that any traffic originating from 192.168.122.0/255.255.255.0 network, and trying to reach any other network but itself (-d ! 192.168.122.0/255.255.255.0) should be MASQUERADEd. In simple words, if there is any traffic coming from a VM (because only a VM can be on this 192.168.122.0/24 network), trying to go out from the physical LAN interface (eth0) of the physical host , must be masqueraded. This makes sense, as we don't know what will be the IP of the physical interface of the physical host. This rule facilitates the traffic to go out. | * Line 05 defines a rule in the POSTROUTING chain in the "nat" table. It says that any traffic originating from 192.168.122.0/255.255.255.0 network, and trying to reach any other network but itself (-d ! 192.168.122.0/255.255.255.0) should be MASQUERADEd. In simple words, if there is any traffic coming from a VM (because only a VM can be on this 192.168.122.0/24 network), trying to go out from the physical LAN interface (eth0) of the physical host , must be masqueraded. This makes sense, as we don't know what will be the IP of the physical interface of the physical host. This rule facilitates the traffic to go out. | ||

| - | : Note: This is a single MASQUERADE rule in the default (non-updated) CENTOS 5.5 installation. If you update your installation using "yum update", you will see libvirtd adding three MASQUERADE rules here, instead of one. ( | + | : Note: This is a single MASQUERADE rule found in the default (non-updated) CENTOS 5.5 installation. If you update your installation using "yum update", you will see libvirtd adding three MASQUERADE rules here, instead of one. (The iptables listing shown here, was obtained from our XEN host, prior to updating it). The new three rules provide essentially the same functionality, and are listed below for reference: |

<pre> | <pre> | ||

-A POSTROUTING -s 192.168.122.0/255.255.255.0 -d ! 192.168.122.0/255.255.255.0 -p tcp -j MASQUERADE --to-ports 1024-65535 | -A POSTROUTING -s 192.168.122.0/255.255.255.0 -d ! 192.168.122.0/255.255.255.0 -p tcp -j MASQUERADE --to-ports 1024-65535 | ||

| Line 423: | Line 420: | ||

** Briefly, the first two POSTROUTING rules shown above, MASQUERADE the outgoing TCP and UDP traffic to the IP of the public interface, making sure that the ports are rewritten only using the port-range between 1024 and 65535. The third rule takes care of other outgoing traffic, such as ICMP. | ** Briefly, the first two POSTROUTING rules shown above, MASQUERADE the outgoing TCP and UDP traffic to the IP of the public interface, making sure that the ports are rewritten only using the port-range between 1024 and 65535. The third rule takes care of other outgoing traffic, such as ICMP. | ||

| - | * Line 06 and 21: The word COMMIT indicates the end of list of rules for a particular | + | * Line 06 and 21: The word COMMIT indicates the end of list of rules for a particular iptables "table". |

| - | * Lines 11 to 14: Virtual machines need a mechanism to get IP automatically and name resolution. DNSMASQ is the small service running on the | + | * Lines 11 to 14: Virtual machines need a mechanism to get IP automatically, and to do name resolution. DNSMASQ is the small service running on the physical host, serving both DNS and DHCP requests coming in from the VMs only. The DNSMASQ service on this physical host is not reachable over the physical LAN by any other physical host. It does not interfere with any DHCP service running elsewhere on the physical LAN. Lines 11 to 14 allow this incoming traffic from the VMs to arrive on the virbr0 interface of the physical host. |

| - | * Line 15: In case some traffic originated from a VM, and went out on the internet (because of line05), it will now want to reach back to the VM. For example, on the VM, you tried to pull an httpd.rpm file from a CENTOS mirror. | + | : <b>Note:</b> It is good to have DHCP service available on the physical host. This helps in initial provisioning / installation of VMs. However, if you have a production setup, you should setup a sensible/fixed IP for each VM, manually, after initial provisioning. |

| - | * Line 16: | + | * Line 15: In case some traffic originated from a VM, and went out on the internet (because of line 05), it will now want to reach back to the VM. For example, on the VM, you tried to pull an httpd-x.y.rpm file from a CENTOS mirror. For this, an HTTP request is originated from this VM, reaches the CENTOS mirror (on the internet). Now the packets related to this transaction need to be allowed back in, so they can reach the VM. This requires a rule in the FORWARD chain, shown in line 15, which says that any traffic going to any VM on 192.168.122.0/255.255.255.0 network, exiting through/towards the virbr0 interface of the physical host, and is RELATED to some previous traffic, must be allowed. Without this, the return traffic/packets will never reach the VM, and you will get all sorts of weird time-outs, on your VM. |

| + | * Line 16: This line says that any traffic coming from virbr0, having source IP address from the 192.168.122.0/255.255.255.0 network scheme, trying to go across the FORWARD chain (to go anywhere elsewhere), must be ACCEPTed. Basically this is the line/rule, which transports a packet from virbr0 to eth0 (in this single direction only). Notice that this rule does not specify the state of the packet passing through the chain. Its purpose is to facilitate any traffic from a VM, going outside. This traffic can be of type NEW, originating from any of the VMs, or, it can be a return traffic of the type RELATED/ESTABLISHED, related to some previous communication, coming from the VM, going outside. Since this rule does not specify state of the packet, it serves both purposes. | ||

* Line 17: This is simply a multi-direction facilitator for all VMs connected to one virtual network. For example, VM1 and VM3 want to exchange some data on TCP/UDP/ICMP, their traffic would naturally traverse the virtual switch, virbr0. This line/rule allows that traffic to be ACCEPTed. | * Line 17: This is simply a multi-direction facilitator for all VMs connected to one virtual network. For example, VM1 and VM3 want to exchange some data on TCP/UDP/ICMP, their traffic would naturally traverse the virtual switch, virbr0. This line/rule allows that traffic to be ACCEPTed. | ||

| - | So far, we are able to send the traffic | + | So far, in the discussion, we have observed/understood the following: |

| + | * We are able to send the traffic outside from a VM, irrespective of connection-state. | ||

| + | * We are able to receive the RELATED/ESTABLISHED traffic back to VM. | ||

| - | * Line 18: This line is read as : Any traffic coming from any source address, and going out to outgoing interface virbr0, will be rejected with a ICMP "port unreachable" message. | + | Let's continue reading. |

| + | |||

| + | * Line 18: This line is read as : Any traffic coming from any source address, and going out to outgoing interface virbr0, will be rejected with an ICMP "port unreachable" message. We have already dealt with traffic coming in from virbr0, or any of the other VMs on the same private subnet, in the iptables rules, before #18. This rule is for the traffic coming from "outside network" / "physical LAN". That means, any traffic coming/originated from outside, coming in for a VM, will be REJECTed. In other words, any traffic which could not satisfy the rules so far, and interested in reaching the VMs, going towards the virbr0 interface, is REJECTed. For example, you start a simple web service on port 80 on VM1. If you setup a port to be forwarded from the physical interface of your physical host, to this port 80 on the VM, using DNAT, and try to reach that port, your access will be REJECTed because of this rule! | ||

* Line 19: Any traffic coming in from any VM, through virbr0 interface and trying to go out from the physical interface of the physical host (eth0) will be REJECTed. For example, in line /rule 16 we saw that any traffic coming in from a VM on 192.168.122.0/24 network, and trying to go out the physical interface of the physical machine is allowed. True, but please note, that is only allowed, when the source IP is from the 192.168.122.0/24 network! If there is a VM on the same virtual switch/bridge (virbr0), but with different IP, (say 10.1.1.1) , and it tries to go through the physical host towards the other side, it would be denied access. This is the last iptables rule as defined by libvirt. The next rule is from XEN. | * Line 19: Any traffic coming in from any VM, through virbr0 interface and trying to go out from the physical interface of the physical host (eth0) will be REJECTed. For example, in line /rule 16 we saw that any traffic coming in from a VM on 192.168.122.0/24 network, and trying to go out the physical interface of the physical machine is allowed. True, but please note, that is only allowed, when the source IP is from the 192.168.122.0/24 network! If there is a VM on the same virtual switch/bridge (virbr0), but with different IP, (say 10.1.1.1) , and it tries to go through the physical host towards the other side, it would be denied access. This is the last iptables rule as defined by libvirt. The next rule is from XEN. | ||

| - | Note 1: Rules 18 and 19, shown here, act as a default <b>reject-all</b> or <b>drop-all</b> policy for the FORWARD chain. | + | <b>Note 1:</b> Rules 18 and 19, shown here, act as a default <b>reject-all</b> or <b>drop-all</b> policy for the FORWARD chain. |

| - | Note 2: The PHYSDEV match rule, has it's own explanation, purely related to XEN. Thus it is discussed separately in the next section. | + | |

| + | <b>Note 2:</b> The PHYSDEV match rule, has its own explanation, purely related to XEN. Thus it is discussed separately in the next section. | ||

| + | |||

| + | <b>Note 3:</b> The rules, from sequence #15 to #20, (all FORWARD rules listed above), are totally un-necessary, "if" the policy of the FORWARD chain is set to ACCEPT, and there is no other rule restricting any traffic. You will still be able to communicate with all VMs, from outside to inside, inside to outside, and VM to VM. This is explained in the <i>Trivia</i> section at the end of this paper. | ||
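The walk-through of FORWARD rules 15 to 19 above can be summarised as a first-match decision. The shell sketch below is a deliberately simplified model of that reasoning, not a replacement for iptables: it only looks at the incoming and outgoing interface, whether the source or destination address lies inside 192.168.122.0/24, and the connection state. The function name first_match and its argument layout are assumptions made for this example.

```shell
#!/bin/sh
# Simplified model of FORWARD rules 15-19 as described in the text.
# Hypothetical helper; real iptables matching looks at many more fields.
#
# first_match IN_IF OUT_IF SRC_IN_VMNET DST_IN_VMNET STATE
#   SRC_IN_VMNET / DST_IN_VMNET : "yes" if the address is in 192.168.122.0/24
#   STATE                       : NEW, ESTABLISHED or RELATED
first_match() {
    in_if=$1; out_if=$2; src_vm=$3; dst_vm=$4; state=$5

    case $state in
        RELATED|ESTABLISHED) known=yes ;;
        *)                   known=no ;;
    esac

    # Rule 15: return traffic going to a VM through virbr0
    if [ "$out_if" = virbr0 ] && [ "$dst_vm" = yes ] && [ "$known" = yes ]; then
        echo "rule 15: ACCEPT"; return
    fi
    # Rule 16: anything from the VM network entering through virbr0
    if [ "$in_if" = virbr0 ] && [ "$src_vm" = yes ]; then
        echo "rule 16: ACCEPT"; return
    fi
    # Rule 17: VM to VM traffic across the same bridge
    if [ "$in_if" = virbr0 ] && [ "$out_if" = virbr0 ]; then
        echo "rule 17: ACCEPT"; return
    fi
    # Rule 18: everything else heading towards virbr0
    if [ "$out_if" = virbr0 ]; then
        echo "rule 18: REJECT"; return
    fi
    # Rule 19: everything else coming from virbr0
    if [ "$in_if" = virbr0 ]; then
        echo "rule 19: REJECT"; return
    fi
    echo "no rule matched: chain policy applies"
}

first_match virbr0 eth0 yes no NEW          # VM opens a connection to the outside
first_match eth0 virbr0 no yes ESTABLISHED  # reply coming back to the VM
first_match eth0 virbr0 no yes NEW          # new connection from outside (e.g. after DNAT)
first_match virbr0 eth0 no no NEW           # VM using an address such as 10.1.1.1
```

In this model, the third call is the case discussed under Line 18: a new connection from outside, even when correctly redirected by a DNAT rule, falls through to rule 18 and is REJECTed.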

| + | |||

====The PHYSDEV match rule==== | ====The PHYSDEV match rule==== | ||

| - | * Line 20: This is interesting. First, notice that this rule is not part of the default libvirt rule-set. Instead, when a VM on XEN physical host was powered on, this rule got added to the rule-set. (This does not happen on KVM host). When the virtual machine is shutdown, this line gets removed as well. The code controlling this | + | * Line 20: This is interesting. First, notice that this rule is not part of the default libvirt rule-set. Instead, when a VM on XEN physical host was powered on, this rule got added to the rule-set. (This does not happen on KVM host). When the virtual machine is shutdown, this line gets removed as well. The code controlling this behaviour resides in /etc/xen/scripts/vif-common.sh file. |

** "PHYSDEV" is a special match module, made available in 2.6 kernels. It is used to match the bridge's physical in and out ports. Its basic usage is simple. e.g. " iptables -m physdev --physdev-in <bridge-port> -j <TARGET>" . It is used in situations where an interface may, or may not, (or may never), have an IP address. Check the iptables man page for more detail on "physdev". | ** "PHYSDEV" is a special match module, made available in 2.6 kernels. It is used to match the bridge's physical in and out ports. Its basic usage is simple. e.g. " iptables -m physdev --physdev-in <bridge-port> -j <TARGET>" . It is used in situations where an interface may, or may not, (or may never), have an IP address. Check the iptables man page for more detail on "physdev". | ||

** In XEN, for each virtual machine, there is a <b><i>vifX.Y</i></b>. A so called / virtual patch cable runs from the virtual machine to this port on the bridge. In the (XEN) example we are following, VM1 (aka domain-1, or domain with id #1), is connected to the virtual bridge on the physical host, on <b>vif1.0</b> port. Here is the rule for reference. | ** In XEN, for each virtual machine, there is a <b><i>vifX.Y</i></b>. A so called / virtual patch cable runs from the virtual machine to this port on the bridge. In the (XEN) example we are following, VM1 (aka domain-1, or domain with id #1), is connected to the virtual bridge on the physical host, on <b>vif1.0</b> port. Here is the rule for reference. | ||

| Line 445: | Line 451: | ||

</pre> | </pre> | ||

** Since these iptables rules (and rule #20 in particular) are added at the XEN (physical) host, we will read and understand the physdev-in and physdev-out, taking XEN host as a reference. Thus, physical-device-in (--physdev-in vif1.0) means that traffic coming in (received) from vif1.0 port on the bridge, towards the XEN host. | ** Since these iptables rules (and rule #20 in particular) are added at the XEN (physical) host, we will read and understand the physdev-in and physdev-out, taking XEN host as a reference. Thus, physical-device-in (--physdev-in vif1.0) means that traffic coming in (received) from vif1.0 port on the bridge, towards the XEN host. | ||

| - | ** The script /etc/xen/scripts/vif-common.sh has facility to add this rule either at the end of the current | + | ** The script /etc/xen/scripts/vif-common.sh has facility to add this rule either at the end of the current rule-set of the physical host, or, at the top of the rule-set of the physical host. This is do-able by changing the "-A" to "-I" in this script file. Here is the part of the script for reference: |

<pre> | <pre> | ||

| Line 461: | Line 467: | ||

. . . | . . . | ||

</pre> | </pre> | ||

| - | <b>Note:</b> The intended purpose of this (PHYSDEV) rule (line #20), as designed by XEN was: to make sure that whatever your previous firewall rules on the physical host, (assuming they are "sane"), the VM can always send traffic to/through the physical host, primarily over the FORWARD chain. However, on RedHat and | + | <b>Note:</b> The intended purpose of this (PHYSDEV) rule (line #20), as designed by XEN was: to make sure that whatever your previous firewall rules on the physical host, (assuming they are "sane"), the VM can always send traffic to/through the physical host, primarily over the FORWARD chain. However, on RedHat and derivatives (RHEL, CENTOS, Fedora), the PHYSDEV rules have no effect, because of certain kernel parameters. It was proved during our testing, this rule doesn't seem to register any traffic, with any traffic coming in from vif1.0, or going out to vif1.0. During some tests, it was made sure that the PHYSDEV rule was at the top of FORWARD chain (using the c="-I"), instead of being added to the end of the (FORWARD chain) rules, to ensure any possible match, right in the beginning, when a packet starts to traverse through the FORWARD chain. Still, we could not get any traffic matching this rule. And the counters at this rule always remained at zero. This is solely because of the fact, that certain sysctl settings prevent bridged traffic to pass through the iptables. |

<pre> | <pre> | ||

| Line 478: | Line 484: | ||

</pre> | </pre> | ||

| - | + | The directives configured in the /etc/sysctl.conf file, shown below, prevent bridged traffic from being passed through netfilter (iptables), so the (--physdev-in) rule never sees any packets. Basically, the bridge is prevented from calling netfilter (iptables), as that conflicts with the way libvirt sets up bridge rules. Therefore, the wise-guys at RedHat, and contributors around the world, suggested configuring the system to not pass PHYSDEV (bridged devices) traffic through iptables. The following code is what you will find in your /etc/sysctl.conf file, which is responsible for this behaviour. | |

<pre> | <pre> | ||

| Line 491: | Line 497: | ||

</pre> | </pre> | ||

| - | The /etc/xen/xend-config.sxp specifies a directive < | + | From the URL, http://www.linuxfoundation.org/collaborate/workgroups/networking/ip-sysctl#.2Fproc.2Fsys.2Fnet.2Fbridge , here is some help on these configuration directives: |

| + | |||

| + | <b>/proc/sys/net/bridge</b> | ||

| + | |||

| + | * <b><i>bridge-nf-call-arptables</i></b> Possible values (1/0). 1: pass bridged ARP traffic to arptables FORWARD chain. 0: disable this (default). | ||

| + | * <b><i>bridge-nf-call-iptables</i></b> Possible values (1/0). 1: pass bridged IPv4 traffic to iptables chains. 0: disable this (default). | ||

| + | * <b><i>bridge-nf-call-ip6tables</i></b> Possible values (1/0). 1: pass bridged IPv6 traffic to ip6tables' chains. 0: disable this (default). | ||

| + | * <b><i>bridge-nf-filter-vlan-tagged</i></b> Possible values 1/0). 1: pass bridged vlan-tagged ARP/IP traffic to arptables/iptables. 0: disable this (default). | ||

| + | |||

| + | The /etc/xen/xend-config.sxp specifies a directive <i>(network-script network-bridge)</i>, which means that XEN will set up the network, of type: network-bridge. The other options are <i>network-nat</i> and <i>network-route</i>. The directive <i>network-bridge</i>, expects a bridge (xenbr0), sharing the physical network device (peth0). Thus, XEN's PHYSDEV match rule, in the situation, where libvirt is providing the <i>network-nat</i> service, (not XEN), is totally useless/un-necessary, in the first place. | ||

| - | Note: If you are using a pure XEN based setup, in which you are not using libvirt at all, (i.e. libvirtd service is disabled), then, you should expect to see PHYSDEV rules | + | <b>Note:</b> If you are using a pure XEN based setup, in which you are not using libvirt at all, (i.e. libvirtd service is disabled), then, you should not only expect to see PHYSDEV rules; you have to make sure, that the bridges you set up, in your XEN host, are able to pass their traffic to iptables. In that case you will need to "enable" the various "net.bridge.bridge-nf-call-*" settings in your /etc/sysctl.conf file, by setting value of the directives to "1". |

For our discussion in this paper, we will keep the "net.bridge.bridge-nf-call-*" settings "disabled", and we will not bother about the PHYSDEV rules in our rule-set either. They can exist at the top of the FORWARD chain, or at the bottom, or not exist at all. | For our discussion in this paper, we will keep the "net.bridge.bridge-nf-call-*" settings "disabled", and we will not bother about the PHYSDEV rules in our rule-set either. They can exist at the top of the FORWARD chain, or at the bottom, or not exist at all. | ||
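To confirm which way these switches are currently set on a host, a small helper like the one below can be used. This is only a sketch: the function name <i>show_bridge_nf</i> and its optional directory argument are our own additions for illustration, and the /proc/sys/net/bridge directory is only present when the kernel's bridge support is loaded.

```shell
#!/bin/sh
# Print the current value of each bridge-nf-call-* switch.
# show_bridge_nf and its optional directory argument are illustrative
# assumptions; the default is the real location, /proc/sys/net/bridge.
show_bridge_nf() {
    dir="${1:-/proc/sys/net/bridge}"
    for name in bridge-nf-call-arptables bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        if [ -r "$dir/$name" ]; then
            # 0 means bridged traffic is NOT handed to the *tables chains.
            echo "$name = $(cat "$dir/$name")"
        else
            # The file is missing when bridge support is not loaded.
            echo "$name = unavailable"
        fi
    done
}

show_bridge_nf
```

A value of 0 for bridge-nf-call-iptables matches the "disabled" state assumed in the rest of this paper.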

| - | ====Booting the XEN host with both iptables and libvirtd services enabled==== | + | ====Booting the XEN/KVM host with both iptables and libvirtd services enabled==== |

So far, we have covered all the basics of how the various iptables rules work on a XEN or KVM host. Now is the time to boot the XEN host with the following services enabled: iptables, libvirtd and xend. | So far, we have covered all the basics of how the various iptables rules work on a XEN or KVM host. Now is the time to boot the XEN host with the following services enabled: iptables, libvirtd and xend. | ||

| - | It would be interesting to note that the default startup sequence of key services is as following: | + | It is interesting to note that the default startup sequence of key init services, on a CENTOS host, is as follows: |

<pre> | <pre> | ||

| Line 532: | Line 547: | ||

</pre> | </pre> | ||

| - | Note: The only VM at the moment is is VM1, and is configured to "not" start at system boot, on this XEN host. Thus, we will not see it up, nor will we see the related PHYSDEV rule at the moment. | + | <b>Note:</b> The only VM at the moment is VM1, and it is configured to "not" start at system boot on this XEN host. Thus, we will not see it up, nor will we see the related PHYSDEV rule at the moment. |

| - | Before rebooting, recall that we have | + | Before rebooting, recall that we have simple iptables rules configured in the /etc/sysconfig/iptables file, which just log the traffic, plus one rule that blocks incoming SMTP traffic. When the system is rebooted, we will analyse which rules disappeared, got displaced, etc. For a quick re-cap, here is the simple iptables rules file we have created: |

<pre> | <pre> | ||

| Line 563: | Line 578: | ||

</pre> | </pre> | ||

| - | ====Post boot analysis of iptables rules on the XEN host, and Conclusion==== | + | ====Post boot analysis of iptables rules on the XEN/KVM host, and Conclusion==== |

After the system booted up, we checked the rules, and below is what we see: | After the system booted up, we checked the rules, and below is what we see: | ||

<pre> | <pre> | ||

| Line 609: | Line 624: | ||

</pre> | </pre> | ||

| - | As you would notice, the following chains are altered by libvirtd: INPUT, FORWARD and POSTROUTING. Also notice the rules we configured in /etc/sysconfig/iptables are enabled, but are "pushed down" by the iptables rules applied by libvirt. After | + | As you would notice, the following chains are altered by libvirtd: INPUT, FORWARD and POSTROUTING. Also notice the rules we configured in /etc/sysconfig/iptables are enabled, but are "pushed down" by the iptables rules applied by libvirt. After analysing these rules, we deduce the following: |

| - | # The FORWARD chain has two < | + | # The FORWARD chain has two <i>reject-all</i> type of rules introduced by libvirtd. And we find our innocent rules are pushed below them. Note that in a single server scenario, we have no use of the FORWARD chain anyway. However, in case we are using XEN or KVM, and have VMs on the private network (virbr0), then the rules are restrictive. The rules added by libvirt restrict the traffic flow on the FORWARD chain. That is, traffic is not allowed to come in from the outside host/network, to the VMs (on virbr0). Worst is that any rules we configured are pushed down in the FORWARD chain, below the <i>reject-all</i> rules inserted by libvirtd. So, even if we configure certain rules to allow NEW traffic flow from eth0 to virbr0 on the FORWARD chain, our rules will be pushed down. <b>This is a point of concern for us. We have to find a solution for this.</b> |

| - | # The four rules inserted by libvirt in the (beginning of) INPUT chain, are just making sure that the DHCP and DNS traffic from the VMs have no issue reaching the physical host. The INPUT chain does not have | + | # The four rules inserted by libvirt at the beginning of the INPUT chain just make sure that the DHCP and DNS traffic from the VMs has no issue reaching the physical host. The INPUT chain does not have <i>reject-all</i> sort of rules at the bottom of the chain, as we saw in the FORWARD chain. Our example SMTP-block and LOG rules still exist at the bottom of the INPUT chain. This means we can have whatever rules we want in the INPUT chain, for the physical host's protection. That also means we can use the /etc/sysconfig/iptables file to configure the protection rules for the physical host, and rest assured that they will not be affected. Good. No issues here. |

# The OUTPUT chain in both nat and filter tables, has no new rules from libvirt. The OUTPUT chain is otherwise of no importance to us. Thus, no issues here. | # The OUTPUT chain in both nat and filter tables, has no new rules from libvirt. The OUTPUT chain is otherwise of no importance to us. Thus, no issues here. | ||

# The POSTROUTING chain has MASQUERADE rules, which basically are the facilitators for the VMs on the private virtual network inside our physical host. The MASQUERADE rules make sure that any packets going out the outgoing interface have their source IP address replaced with the IP address of the public interface. We didn't want to have any rules configured in the POSTROUTING chain, so this is also a good thing. We don't have issues here. However, we have a small concern. MASQUERADE is slower than SNAT. MASQUERADE queries the public interface for the IP, each time a packet wants to go outside to the internet, and then replaces the source address in the IP header of the outgoing packet with the public IP it obtained in the previous step. Public internet servers normally have a fixed public IP address. SNAT can be used instead of MASQUERADE. When SNAT is used, it is configured to always replace the source address in the IP header of the outgoing packet with a fixed IP. So the step to query the public interface is skipped in the SNAT case. Thus SNAT should be used in such scenarios. We hope to see a configuration directive from libvirt in future, to change the MASQUERADE into SNAT, without getting involved in any tricks. | # The POSTROUTING chain has MASQUERADE rules, which basically are the facilitators for the VMs on the private virtual network inside our physical host. The MASQUERADE rules make sure that any packets going out the outgoing interface have their source IP address replaced with the IP address of the public interface. We didn't want to have any rules configured in the POSTROUTING chain, so this is also a good thing. We don't have issues here. However, we have a small concern. MASQUERADE is slower than SNAT. MASQUERADE queries the public interface for the IP, each time a packet wants to go outside to the internet, and then replaces the source address in the IP header of the outgoing packet with the public IP it obtained in the previous step. Public internet servers normally have a fixed public IP address. 
SNAT can be used instead of MASQUERADE. When SNAT is used, it is configured to always replace the source address in the IP header of the outgoing packet with a fixed IP. So the step to query the public interface is skipped in the SNAT case. Thus SNAT should be used in such scenarios. We hope to see a configuration directive from libvirt in future, to change the MASQUERADE into SNAT, without getting involved in any tricks. | ||

# The PREROUTING chain has no rules added by libvirt. This is simply beautiful. Because, this is where we would configure our traffic redirector (DNAT) rules (, in the /etc/sysconfig/iptables file). No issues here. | # The PREROUTING chain has no rules added by libvirt. This is simply beautiful. Because, this is where we would configure our traffic redirector (DNAT) rules (, in the /etc/sysconfig/iptables file). No issues here. | ||

| - | So by looking at our points above, we can conclude that we can configure certain iptables rules to manage traffic to/from the VMs, as well as protect the physical server itself from malicious traffic. The only aspect we cannot control from /etc/sysconfig/iptables file is the proper flow of NEW and RELATED/ESTABLISHED traffic between eth0 and virbr0. To control this, we need to do something manually. | + | So by looking at our points above, we can conclude that we can configure certain iptables rules to manage traffic to/from the VMs, as well as protect the physical server itself from malicious traffic. The only aspect we cannot control from /etc/sysconfig/iptables file is the proper flow of NEW and RELATED/ESTABLISHED traffic between eth0 and virbr0, on the FORWARD chain. To control this, we need to do something manually. |
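For readers who want to see what the SNAT alternative to MASQUERADE, mentioned in the POSTROUTING point above, would look like, here is a minimal sketch. It only prints the rule and does not apply it. The helper name <i>snat_rule</i> is our own, and the values used (192.168.122.0/24, eth0, 192.168.1.201) are the example addresses from this paper. Keep in mind that libvirt still adds its own MASQUERADE rules, so this rule alone does not replace them.

```shell
#!/bin/sh
# Print (not execute) a SNAT rule for traffic leaving the VM network.
# snat_rule is a hypothetical helper, used here for illustration only.
#   $1 = private VM network, $2 = outgoing interface, $3 = fixed public IP
snat_rule() {
    vm_net="$1"
    out_if="$2"
    public_ip="$3"
    echo "iptables -t nat -A POSTROUTING -s $vm_net -o $out_if -j SNAT --to-source $public_ip"
}

snat_rule 192.168.122.0/24 eth0 192.168.1.201
```

Printing the rule first, instead of running it, makes it easy to review the rule before it is pasted into a root shell.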

| - | ===Solutions | + | ===Solutions=== |

We have analysed the iptables rules set up by libvirt in depth, and we have reached the conclusion that there are not that many issues if we want to access a particular VM (on the private network) of the publicly accessible physical host (XEN/KVM). To do that, we need to configure some iptables rules of our own in the /etc/sysconfig/iptables file. There are two scenarios, however, and we will deal with both. | We have analysed the iptables rules set up by libvirt in depth, and we have reached the conclusion that there are not that many issues if we want to access a particular VM (on the private network) of the publicly accessible physical host (XEN/KVM). To do that, we need to configure some iptables rules of our own in the /etc/sysconfig/iptables file. There are two scenarios, however, and we will deal with both. | ||

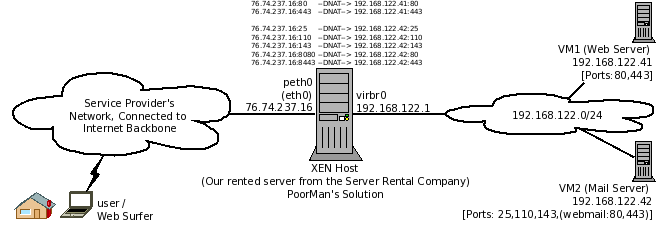

| - | * <b>The Poor-Man's setup</b>: In this setup, we have only one public IP for our server, and cannot afford to (or, don't want to) acquire another public IP for the same server. This may be suitable for situations, for example, where you just need to separate web server and mail server, as two separate VMs on the same host. Since the port requirements of these two VMs is totally separate, (except the webmail part), we don't have the requirement to acquire two additional public IPs for two VMs. We can simply redirect traffic for ports 80 and 443 to VM1 (web server), and traffic for ports 25, 110, 143 to VM2 (mail server). However this is a limited way to manage the publicly accessible services on private VMs. What if we have two, or three or more (virtual) web servers to access from the internet, all on the same physical host? Of-course, we can forward port 80 traffic to only one VM (web server), at a time. For other | + | * <b>The Poor-Man's setup</b>: In this setup, we have only one public IP for our server, and cannot afford to (or, don't want to) acquire another public IP for the same server. This may be suitable for situations, for example, where you just need to separate web server and mail server, as two separate VMs on the same host. Since the port requirements of these two VMs is totally separate, (except the webmail part, if there is any), we don't have the requirement to acquire two additional public IPs for two VMs. We can simply redirect traffic for ports 80 and 443 to VM1 (web server), and traffic for ports 25, 110, 143 to VM2 (mail server). However this is a limited way to manage the publicly accessible services on private VMs. What if we have two, or three, or more (virtual) web servers to access from the internet, all on the same physical host? Of-course, we can forward port 80 traffic to only one VM (web server), at a time. 
For other web-servers, we have to use non-standard port numbers to forward the traffic to the port 80 on VMs; such as forwarding port 8080 on the public interface of our XEN host to port 80 on VM2. Even if we do that, who will instruct the clients to use an additional ":8080" in the URL they are typing in their web-browsers? Clearly this is impractical approach, (with very limited usability), and is suitable for only one service / port redirection per physical host. Thus, appropriately named as <i>Poor-Man's Setup</i>. The following diagram will help explain more. In the diagram, notice that VM2 is a mail server, but it also hosts a webmail software (squirrelmail, etc), thus needing a web server running on port 80 and/or port 443. To make it reachable, we need a forwarding mechanism. The DNAT rules mentioned in the diagram are an example to achieve this. |

| + | |||

[[File:Libvirt-iptables-problem-solution-poorman-1.png|frame|none|alt=|Figure 5: The PoorMan's Setup. Notice the complicated/lengthy iptables rules.]] | [[File:Libvirt-iptables-problem-solution-poorman-1.png|frame|none|alt=|Figure 5: The PoorMan's Setup. Notice the complicated/lengthy iptables rules.]] | ||

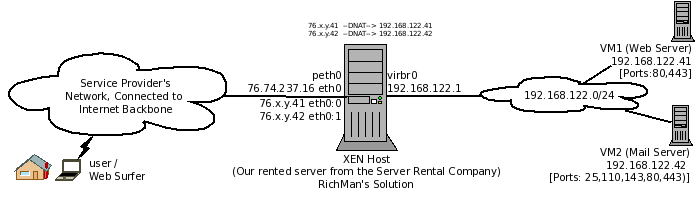

| - | * <b>The Rich-Man's setup</b>: In this setup, we have a physical host, and, ability/freedom/money to purchase extra IP addresses, as we please. (Note: You normally have to give a valid justification to the service provider, in case you are requesting additional IPs). You may, or may not have additional network card on this host. We will assume that you don't have an additional network card on this physical host, because that is the case with 99% of web servers, provided by the server rental companies. Adding an additional network card on the server is either not possible (for technical reasons/limitations), or is costly. In such case, the service provider may ask you to setup additional IP address on the sub-interface of your existing network card, assuming you are using Linux. (You wouldn't be reading this | + | * <b>The Rich-Man's setup</b>: In this setup, we have a physical host, and, ability/freedom/money to purchase extra IP addresses, as we please. Thus, appropriately named as <i>Rich-Man's Setup</i>. (Note: You normally have to give a valid justification to the service provider, in case you are requesting additional IPs). You may, or may not have additional network card on this host. We will assume that you don't have an additional network card on this physical host, because that is the case with 99% of web servers, provided by the server rental companies. Adding an additional network card on the server is either not possible (for technical reasons/limitations), or is costly. In such case, the service provider may ask you to setup additional IP address on the sub-interface of your existing network card, assuming you are using Linux. (You wouldn't be reading this paper, if you were using anything other than Linux anyway!). At this point, the reader may have a valid question, which is; <i>Why didn't we setup our VMs on this host, on xenbr0, instead of virbr0? 
That would have been lot easier, and there was never a need to discuss private network in the first place.</i> (Ah! If life was that simple!). This has been discussed in the beginning / introduction of this paper. Once again, for the sake of re-cap, the reason is, that the server rental companies like ServerBeach.com does not provide this mode of configuring VMs. Their billing system is not capable to handle traffic coming from two different MAC address of the same physical server. (VMs have different MAC addresses compared to the physical host). Thus, they want you to setup your VMs on the physical host's private network, setup a sub-interface of eth0, which will be eth0:0, and use the additional IP address they will give you on that sub-interface, and forward those public IPs to the private IPs on the VMs inside. This will make sure that no matter the traffic will arrive and leave on two different IP addresses, the MAC address will remain same, and thus no issues for billing. |

| - | + | <b>Note:</b> If you are wondering why the MAC addresses will remain the same, here is a brief description. MAC addresses do not get forwarded beyond an ethernet segment. In other words, MAC addresses do not cross a router. Since the VMs are on the private network, on virbr0, and the public IPs are on eth0, the XEN/KVM host in this situation basically acts as a NAT router between these two networks. So any packet coming from a VM, going outwards through eth0, is MASQUERADEd, and also loses its original MAC address. The hosts on the network connected to eth0 of the XEN/KVM host see the MAC address of the physical host in the packets coming from eth0. That is why some server rental companies, such as ServerBeach, ask you to set up your VMs in this way. Clever! | |

| + | The following diagram will help explain more. Notice that the iptables rules for the "RichMan's Setup" are much simpler than the ones used in "PoorMan's Setup". Also notice that in this (RichMan's) setup, the IP of the XEN host's eth0 (76.74.237.16) is not forwarded to any VM. Only the public IPs assigned on the two sub interfaces of eth0 are forwarded/DNATed. | ||

| + | <b>Note:</b> The virtual network cards on the VMs have their own MAC addresses. As per standards, the virtual network cards on XEN VMs must have MAC addresses with the first three octets as: "00:16:3e". Those on KVM must have the first three octets of the MAC addresses as: "54:52:00". | ||

| + | |||

| + | [[File:Libvirt-iptables-problem-solution-richman-1.png|frame|none|alt=|Figure 6: The RichMan's Setup. Notice the simplicity of iptables rules.]] | ||

| - | |||

| - | ==== | + | With the explanation for the two types of setups out of the way, let's configure the "Poor-Man's" setup first. |

| - | Scenario: One XEN/KVM host with | + | ====Scenario 1: The Poor-Man's Setup, and The Solution==== |

| + | <b>Scenario:</b> One XEN/KVM host with two VMs installed inside it, on private network (virbr0). VM1 is configured as a web server, running on ports 80 and 443. VM2 is a mail server, offering services on ports 25,110,143, and 80,443 for the webmail interface. | ||

* XEN host's eth0 IP : 192.168.1.201 | * XEN host's eth0 IP : 192.168.1.201 | ||

* VM1 IP: 192.168.122.41 | * VM1 IP: 192.168.122.41 | ||

| Line 638: | Line 658: | ||

* Client / site visitor IP: 192.168.1.5 | * Client / site visitor IP: 192.168.1.5 | ||

| - | Objective: The VMs should be able to access the internet, for updates, etc. The VMs should also be accessible from the outside, using a public IP. VM1's webserver is serving a single/simple web page, index.html, having the text/content: "Private VM1 inside a XEN host" in it. This is what we should be able to see in our browser. VM2 is primarily running mail server components, such as Postfix and Dovecot. It also hosts a webmail software, squirrelmail, available through it's webserver running on standard port 80 and 443. | + | <b>Objective:</b> The VMs should be able to access the internet, for updates, etc. The VMs should also be accessible from the outside, using a public IP. VM1's webserver is serving a single/simple web page, index.html, having the text/content: "Private VM1 inside a XEN host" in it. This is what we should be able to see in our browser. VM2 is primarily running mail server components, such as Postfix and Dovecot. It also hosts a webmail software, squirrelmail, available through its webserver running on standard ports 80 and 443. VM2's webserver is serving a webpage with the content "WebMail on VM2 inside a XEN host". |

| - | Configuration: | + | <b>Configuration:</b> Most of the iptables rules are already configured by the libvirt service. Those rules will ensure that the VMs are capable of accessing the internet. We only need to add few DNAT rules on the PREROUTING chain, and few rules on the FORWARD chain. |

| - | Most of the iptables rules are already configured by the libvirt service. Those rules will ensure that the VMs are capable of accessing the internet. We only need to add | + | |

| + | First the PREROUTING rules: | ||

<pre> | <pre> | ||

# The following two rules are for directing traffic of port 80 and 443 to VM1. | # The following two rules are for directing traffic of port 80 and 443 to VM1. | ||

| Line 648: | Line 668: | ||

# iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 443 -j DNAT --to-destination 192.168.122.41 | # iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 443 -j DNAT --to-destination 192.168.122.41 | ||

# | # | ||

| - | # The following rules are | + | # The following rules are for directing traffic of ports 25, 110 and 143 to VM2. |

# We will also forward ports 8080 and 8443 to ports 80 and 443, respectively, on VM2 | # We will also forward ports 8080 and 8443 to ports 80 and 443, respectively, on VM2 | ||

# iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 25 -j DNAT --to-destination 192.168.122.42 | # iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 25 -j DNAT --to-destination 192.168.122.42 | ||

| Line 654: | Line 674: | ||

# iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 143 -j DNAT --to-destination 192.168.122.42 | # iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 143 -j DNAT --to-destination 192.168.122.42 | ||

# | # | ||

| - | # The following | + | # The following rules are for directing traffic of ports 8080 and 8443 to the web service on VM2. |

| + | # Notice the use of --to-destination IP:port syntax. | ||

# iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 8080 -j DNAT --to-destination 192.168.122.42:80 | # iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 8080 -j DNAT --to-destination 192.168.122.42:80 | ||

# iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 8443 -j DNAT --to-destination 192.168.122.42:443 | # iptables -t nat -A PREROUTING -p tcp -i eth0 --destination-port 8443 -j DNAT --to-destination 192.168.122.42:443 | ||

</pre> | </pre> | ||
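Since all of the PREROUTING rules above follow the same pattern, they can also be generated from a small port table. The following is only a sketch under our own assumptions: the helper name <i>dnat_rules</i> and the three-column table format (public port, VM IP, VM port) are made up for illustration, and the script prints the rules instead of applying them.

```shell
#!/bin/sh
# Print one DNAT rule per "public_port vm_ip vm_port" line read from stdin.
# dnat_rules and the table format are hypothetical, for illustration only.
#   $1 = incoming (public) interface
dnat_rules() {
    in_if="$1"
    while read -r pub_port vm_ip vm_port; do
        # Skip empty lines in the table.
        [ -z "$pub_port" ] && continue
        echo "iptables -t nat -A PREROUTING -p tcp -i $in_if --destination-port $pub_port -j DNAT --to-destination $vm_ip:$vm_port"
    done
}

# The port table for the Poor-Man's setup discussed in this section.
dnat_rules eth0 <<'EOF'
80 192.168.122.41 80
443 192.168.122.41 443
25 192.168.122.42 25
110 192.168.122.42 110
143 192.168.122.42 143
8080 192.168.122.42 80
8443 192.168.122.42 443
EOF
```

The generated rules always use the IP:port form of --to-destination, which is equivalent to the shorter form when the port does not change.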

| - | Now we try to access VM1, by contacting the | + | Now we try to access VM1, by contacting the public IP of our physical host, from outside, and see the following "connection refused" message: |

<pre> | <pre> | ||

[kamran@kworkhorse tmp]$ wget http://192.168.1.201 -O index.html; cat index.html | [kamran@kworkhorse tmp]$ wget http://192.168.1.201 -O index.html; cat index.html | ||

| Line 667: | Line 688: | ||

</pre> | </pre> | ||

| - | This behaviour is because the | + | This behaviour is caused by the REJECT rule at line #18, in the rule-set shown a little while ago. That rule blocks any traffic from outside the physical host, coming in from eth0, and going towards the private VMs on virbr0. The default libvirt rules only allow traffic from the VMs to the outside network, not from the outside network to the VMs. Here is the iptables rule for reference. |

| + | <pre> | ||

| + | 18) -A FORWARD -o virbr0 -j REJECT --reject-with icmp-port-unreachable | ||

| + | </pre> | ||

| - | To avoid this from happening, we "insert" the following | + | To prevent this, we will "insert" the following simple rule at the top of the FORWARD chain. This rule accepts any NEW TCP traffic arriving from the internet on the eth0 interface, and lets it be forwarded to the VMs on the virbr0 interface. |

<pre> | <pre> | ||

# iptables -I FORWARD -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT | # iptables -I FORWARD -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT | ||

| - | |||

</pre> | </pre> | ||

| - | After inserting the | + | The state (NEW) of the traffic is not a must to mention. We can have a simpler rule, without specifying the state, and it will still work. Also, since all the common services run on TCP, we have restricted the traffic type to TCP. Again, you can have a simpler rule, to not specify the traffic type in terms of TCP/UDP/ICMP. You can also remove the restriction of incoming interface. This way, in case you have two physical network cards, you will be allowing traffic from both network cards to reach the VMs. Below is the simpler version of the same rule discussed just now. |

| + | <pre> | ||

| + | # iptables -I FORWARD -o virbr0 -j ACCEPT | ||

| + | </pre> | ||

| + | |||

| + | After inserting the rule shown above, we try to access the VM from outside, and see the following web-page, being pulled successfully: | ||

<pre> | <pre> | ||

[kamran@kworkhorse tmp]$ wget http://192.168.1.201 -O index.html; cat index.html | [kamran@kworkhorse tmp]$ wget http://192.168.1.201 -O index.html; cat index.html | ||

| Line 692: | Line 720: | ||

</pre> | </pre> | ||

| - | Success! As you can see, the index.html is displayed on the screen and it's contents read: "Private VM1 inside a XEN host" . We are able to successfully access | + | Success! As you can see, the index.html is displayed on the screen and its contents read: "Private VM1 inside a XEN host". We are able to successfully access VM1 inside the XEN/KVM physical host. Now, we try to pull the webpage from our second VM. This time we will use port 8080 to reach that host. |

| + | |||

| + | <pre> | ||

| + | [kamran@kworkhorse tmp]$ wget http://192.168.1.201:8080 -O index.html ; cat index.html | ||

| + | --2011-02-15 17:12:55-- http://192.168.1.201:8080/ | ||

| + | Connecting to 192.168.1.201:8080... connected. | ||

| + | HTTP request sent, awaiting response... 200 OK | ||

| + | Length: 33 [text/html] | ||

| + | Saving to: “index.html” | ||

| + | |||

| + | 100%[================================================================================>] 33 --.-K/s in 0s | ||

| + | |||

| + | 2011-02-15 17:12:55 (2.82 MB/s) - “index.html” saved [33/33] | ||

| + | |||

| + | Webmail on VM2 inside a XEN host | ||

| + | [kamran@kworkhorse tmp]$ | ||

| + | </pre> | ||

| + | |||

| + | Success! As you can see, index.html is displayed on the screen and its contents read: "Webmail on VM2 inside a XEN host". We can successfully access VM2 as well, inside the XEN/KVM physical host. | ||

| + | |||

| + | Now we need to automate the solution. | ||

By executing a simple "iptables-save" command, we can capture all the rules in a file. And from there, we can edit the file as per our needs. | By executing a simple "iptables-save" command, we can capture all the rules in a file. And from there, we can edit the file as per our needs. | ||

| Line 700: | Line 748: | ||

</pre> | </pre> | ||

| - | Next, we edit this file and remove all such rules, which belong to libvirtd or xend. These are important to remove, otherwise, | + | Next, we edit this file and remove all rules that belong to libvirtd or xend. Removing them is important; otherwise, whenever the libvirt service starts, it adds another (identical) set of rules, doubling the size of the rule-set to no benefit. |
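A rough first pass at this clean-up can be done with a filter instead of by hand. The sketch below is an assumption about what identifies libvirtd/xend rules on this particular host (the virbr0 bridge, the 192.168.122.0 network, and xend's physdev matches); the function name <code>strip_libvirt_rules</code> is hypothetical. It also drops any custom rule that mentions virbr0, which matches the approach here of re-adding the FORWARD rule from a separate script. Review the result manually before using it.

```shell
#!/bin/sh
# Hypothetical filter (not from the original article): reads iptables-save
# output on stdin and drops lines that look like libvirtd/xend rules.
# Assumptions: the default libvirt bridge is virbr0, the VM network is
# 192.168.122.0/24, and xend rules use the physdev match.
strip_libvirt_rules() {
    grep -v -e 'virbr0' -e '192\.168\.122\.0/' -e 'physdev-in'
}

# Example use (the output file name is only an example):
#   strip_libvirt_rules < /etc/sysconfig/iptables > /tmp/iptables.cleaned
```

Table headers, chain policies, COMMIT lines, and DNAT rules that target individual VM addresses such as 192.168.122.41 pass through unchanged, because none of the patterns match them.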

First, here is what the /etc/sysconfig/iptables file looks like, when we used the iptables-save command above. | First, here is what the /etc/sysconfig/iptables file looks like, when we used the iptables-save command above. | ||

| Line 734: | Line 782: | ||

-A INPUT -i eth0 -p tcp -m tcp --dport 25 -j REJECT --reject-with icmp-port-unreachable | -A INPUT -i eth0 -p tcp -m tcp --dport 25 -j REJECT --reject-with icmp-port-unreachable | ||

-A FORWARD -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT | -A FORWARD -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT | ||

| - | |||

-A FORWARD -d 192.168.122.0/255.255.255.0 -o virbr0 -m state --state RELATED,ESTABLISHED -j ACCEPT | -A FORWARD -d 192.168.122.0/255.255.255.0 -o virbr0 -m state --state RELATED,ESTABLISHED -j ACCEPT | ||

-A FORWARD -s 192.168.122.0/255.255.255.0 -i virbr0 -j ACCEPT | -A FORWARD -s 192.168.122.0/255.255.255.0 -i virbr0 -j ACCEPT | ||

| Line 746: | Line 793: | ||

</pre> | </pre> | ||

| - | Below is our desired version. Notice that we have also removed the | + | Below is our desired version. Notice that we have also removed the RELATED/ESTABLISHED rule, and the rule next to it, from the FORWARD chain. They would be pushed down below the reject-all rules anyway, so they serve no purpose here. We have also removed our example rules, LOG and SMTP. We added those rules for the sake of example only; since their purpose is served, we have removed them. |

<pre> | <pre> | ||

| Line 765: | Line 812: | ||

# and the rule below will be pushed down, rendering it useless. | # and the rule below will be pushed down, rendering it useless. | ||

# You may want to add it to rc.local, or a script of your choice, which should run *after* xend is started. | # You may want to add it to rc.local, or a script of your choice, which should run *after* xend is started. | ||

| - | # -A POSTROUTING -s 192.168.122. | + | # -A POSTROUTING -s 192.168.122.0/255.255.255.0 -d ! 192.168.122.0/255.255.255.0 -j SNAT --to-source 192.168.1.201 |

COMMIT | COMMIT | ||

*filter | *filter | ||

| Line 775: | Line 822: | ||

</pre> | </pre> | ||

| - | As you would notice, in-essence we have just kept our traffic redirector rules in the PREROUTING chain. And rest of the file has been emptied. When the system boots next time, iptables service will load these | + | As you will notice, in essence we have kept only our traffic redirector rules in the PREROUTING chain, and the rest of the file has been emptied. When the system boots next time, the iptables service will load these rules. Then, when the libvirt service runs, it will add its default set of rules. The only rule left now is the one that moves the traffic from outside to the VMs across the FORWARD chain. We wish we could control that from the same /etc/sysconfig/iptables file. The solution is either to add this rule to the /etc/rc.local file, or to create a small, simple <i>rc</i> script (a service file) and configure it to start right after the libvirtd/xend service. |

| - | The readers might be thinking that we | + | Readers might be asking: <i>Can't we add all our custom rules to rc.local, or to the proposed new service file?</i> True, that is doable. However, the /etc/sysconfig/iptables file is still an excellent place to add more rules easily when needed, especially for the INPUT chain. It is easier to add server protection rules to this file than to manage them in different places. Besides, our NEW rule is totally generic in nature, and you will probably never need to change it throughout the service lifetime of your physical host. The same is true of the optional SNAT rule. So they can be kept in either rc.local or a small service script. We will show both methods below. |

| + | |||

| + | <b>Note:</b> A very simple version of the complete rule-set is shown at the end of the <i>Solutions</i> section, in case you want to eliminate all rules from the libvirtd or iptables services and set up everything on your own, in only one location. | ||

Method #1: /etc/rc.local | Method #1: /etc/rc.local | ||

| Line 789: | Line 838: | ||

iptables -I FORWARD -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT | iptables -I FORWARD -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT | ||

| - | |||

[root@xenhost ~]# | [root@xenhost ~]# | ||

</pre> | </pre> | ||

| Line 798: | Line 846: | ||

#!/bin/sh | #!/bin/sh | ||

# | # | ||

| - | # post-libvirtd. Sets up additional iptables rules, after libvirt is done adding it's rules. | + | # post-libvirtd-iptables. Sets up additional iptables rules, after libvirt is done adding its rules. |

# | # | ||

# chkconfig: 2345 99 02 | # chkconfig: 2345 99 02 | ||

| - | # description: Inserts | + | # description: Inserts iptables rules to the FORWARD chain on a XEN/KVM Hypervisor. |

# Source function library. | # Source function library. | ||

| Line 808: | Line 856: | ||

start() { | start() { | ||

iptables -I FORWARD -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT | iptables -I FORWARD -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT | ||

| - | + | logger "iptables rules added to the FORWARD chain" | |

| - | logger " | + | |

} | } | ||

| Line 843: | Line 890: | ||

</pre> | </pre> | ||
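Both methods insert the FORWARD rule unconditionally, so running them more than once (for example, restarting the service script) adds the same rule again. A minimal sketch of an idempotent variant follows. It assumes an iptables version that supports the <code>-C</code> (check) option, which the older iptables shipped with some CentOS releases does not; the function name <code>ensure_forward_rule</code> and the <code>IPTABLES</code> variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical wrapper (not from the original article). The IPTABLES variable
# exists only so the binary can be swapped out for testing.
IPTABLES=${IPTABLES:-iptables}

# Insert a rule at the top of the FORWARD chain only if an identical rule is
# not already present. "iptables -C" exits 0 when a matching rule exists.
ensure_forward_rule() {
    if ! $IPTABLES -C FORWARD "$@" 2>/dev/null; then
        $IPTABLES -I FORWARD "$@"
    fi
}

# Usage (as root), with the same rule specification as in the scripts above:
#   ensure_forward_rule -i eth0 -o virbr0 -p tcp -m state --state NEW -j ACCEPT
```

On a system whose iptables lacks <code>-C</code>, the check fails every time and the function falls back to a plain insert, which is the same behaviour as the original scripts.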

| - | After the system boots up, start the | + | After the system boots up, start the VMs, and check the iptables rule-set: |

<pre> | <pre> | ||

[root@xenhost ~]# iptables -L | [root@xenhost ~]# iptables -L | ||

| Line 855: | Line 902: | ||

Chain FORWARD (policy ACCEPT) | Chain FORWARD (policy ACCEPT) | ||

target prot opt source destination | target prot opt source destination | ||

| - | |||

ACCEPT tcp -- anywhere anywhere state NEW | ACCEPT tcp -- anywhere anywhere state NEW | ||

ACCEPT all -- anywhere 192.168.122.0/24 state RELATED,ESTABLISHED | ACCEPT all -- anywhere 192.168.122.0/24 state RELATED,ESTABLISHED | ||

| Line 863: | Line 909: | ||

REJECT all -- anywhere anywhere reject-with icmp-port-unreachable | REJECT all -- anywhere anywhere reject-with icmp-port-unreachable | ||

ACCEPT all -- anywhere anywhere PHYSDEV match --physdev-in vif1.0 | ACCEPT all -- anywhere anywhere PHYSDEV match --physdev-in vif1.0 | ||

| + | ACCEPT all -- anywhere anywhere PHYSDEV match --physdev-in vif2.0 | ||

Chain OUTPUT (policy ACCEPT) | Chain OUTPUT (policy ACCEPT) | ||

| Line 891: | Line 938: | ||

</pre> | </pre> | ||
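The important property of the listing above is ordering: the ACCEPT rule for NEW traffic must appear before the first REJECT rule in the FORWARD chain. As a rough sketch, that can be checked mechanically by piping the chain listing through a small awk filter. The function name <code>accept_before_reject</code> is hypothetical, and the patterns assume the <code>iptables -L</code> output format shown in this document.

```shell
#!/bin/sh
# Hypothetical check (not from the original article): reads the output of
# "iptables -L FORWARD" on stdin and succeeds only if an "ACCEPT ... state NEW"
# rule is listed, and is listed before the first REJECT rule (if any).
accept_before_reject() {
    awk '
        /^ACCEPT/ && /state NEW/ && !accept { accept = NR }
        /^REJECT/ && !reject               { reject = NR }
        END { exit !(accept && (!reject || accept < reject)) }
    '
}

# Usage (as root):
#   iptables -L FORWARD | accept_before_reject && echo "rule order looks right"
```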

| - | Congratulations! Our rules are setup exactly as we expected them to be | + | Congratulations! Our rules are set up exactly as we expected them to be. Now, for the last time, we check whether we can access the web pages of the web servers on VM1 and VM2. |

<pre> | <pre> | ||

| Line 909: | Line 956: | ||

</pre> | </pre> | ||

| - | Excellent! So our solution works perfectly! | + | Excellent! So our solution for VM1 works perfectly! Let's try to access the web service of VM2, which is reachable through port 8080 on our physical host. |

| + | |||

| + | <pre> | ||

| + | [kamran@kworkhorse tmp]$ wget http://192.168.1.201:8080 -O index.html ; cat index.html | ||

| + | --2011-02-15 17:12:55-- http://192.168.1.201:8080/ | ||

| + | Connecting to 192.168.1.201:8080... connected. | ||

| + | HTTP request sent, awaiting response... 200 OK | ||

| + | Length: 33 [text/html] | ||

| + | Saving to: “index.html” | ||

| + | |||

| + | 100%[================================================================================>] 33 --.-K/s in 0s | ||

| + | |||

| + | 2011-02-15 17:12:55 (2.82 MB/s) - “index.html” saved [33/33] | ||

| + | |||

| + | Webmail on VM2 inside a XEN host | ||

| + | [kamran@kworkhorse tmp]$ | ||

| + | </pre> | ||

| + | |||

| + | Great! We are able to access the web-service of VM2 as well! | ||

====Scenario 2: The Rich-Man's Setup, and The Solution==== | ====Scenario 2: The Rich-Man's Setup, and The Solution==== | ||

| - | In this setup, we have multiple public IPs for a single physical host. The extra IPs are configured on sub-interfaces of eth0 of the physical host. This setup allows more freedom. That is, each public IP can be DNATed to an individual VM with a private IP. Thus, the limitation of ports is also lifted. Now, each IP can have it's own port 80, 22, 25, 110, etc. This is exactly what is required by small hosting providers, who have servers provided by server rental companies like ServerBeach.com . | + | <b>Scenario:</b> In this setup, we have multiple public IPs for a single physical host. The extra IPs are configured on sub-interfaces of eth0 of the physical host. This setup allows more freedom: each public IP can be DNATed to an individual VM with a private IP. Thus, the limitation on ports is also lifted, and each IP can have its own port 80, 22, 25, 110, etc. This is exactly what is required by small hosting providers, who have servers provided by server rental companies like ServerBeach.com. |

| + | |||

| + | <b>Objective:</b> The VMs should be able to access the internet, for updates, etc. The VMs should also be accessible from the outside, using public IPs. VM1's web server is serving a single, simple web page, index.html, containing the text: "Private VM1 inside a XEN host". This is what we should be able to see in our browser. VM2 is primarily running mail server components, such as Postfix and Dovecot. It also hosts a webmail package, SquirrelMail, available through its web server running on standard ports 80 and 443. For the time being, we are pulling a simple web page from VM2, which has the content: "Webmail on VM2 inside a XEN host". | ||

| - | Configuration: | + | <b>Configuration:</b> In this setup, we have two VMs running inside our XEN host. Both are web servers, serving different content. VM1 has the private IP 192.168.122.41 and VM2 has the private IP 192.168.122.42. The physical host has three (so-called public) IPs, as follows: |

| - | In this setup, we have two VMs running inside our XEN host. Both are web servers, serving different content. VM1 has private IP 192.168.122.41 and VM2 has the private IP 192.168.122.42. The physical host has three (so called, public) IPs as follows: | + | |

| - | * eth0 | + | * eth0 192.168.1.201 (XEN physical host, NOT mapped to any VM) |

| - | * eth0:0 192.168.1.211 (VM1 public IP) | + | * eth0:0 192.168.1.211 (VM1 public IP, mapped to 192.168.122.41 on VM1) |

| - | * eth0:1 192.168.1.212 (VM2 public IP) | + | * eth0:1 192.168.1.212 (VM2 public IP, mapped to 192.168.122.42 on VM2) |

| - | Note: You can setup more sensible fixed IPs for your VMs. Our practice, and suggestion is, to keep the last octet same, in the public and private IPs. Such as 192.168.122.211 for VM1 and 192.168.122.212 for VM2. | + | : <b>Note:</b> You can set up more sensible fixed IPs for your VMs. Our practice and suggestion is to keep the last octet the same in the public and private IPs, such as 192.168.122.211 for VM1 and 192.168.122.212 for VM2. |
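The public-to-private mapping listed above boils down to one DNAT rule and one SNAT rule per VM. The sketch below prints such a pair in iptables-save style for the nat table. It is an illustration only: the function name <code>nat_pair</code> is hypothetical, the DNAT line is an assumed form of the redirector rule (the SNAT line mirrors the one used in this document), and it uses the newer <code>! -d</code> negation syntax where this document's listings use the older <code>-d !</code> form.

```shell
#!/bin/sh
# Hypothetical generator (not from the original article): prints the nat-table
# rule pair that maps one public IP to one private VM IP.
#   $1 public IP on the host (e.g. the address on eth0:0)
#   $2 private IP of the VM
#   $3 VM network in CIDR form; traffic staying inside it is not SNATed
nat_pair() {
    public_ip=$1; private_ip=$2; vm_net=$3
    # Inbound: anything addressed to the public IP is redirected to the VM.
    printf '%s\n' "-A PREROUTING -d $public_ip -j DNAT --to-destination $private_ip"
    # Outbound: traffic from the VM leaves with its own public IP as source.
    printf '%s\n' "-A POSTROUTING -s $private_ip ! -d $vm_net -j SNAT --to-source $public_ip"
}

# Usage:
#   nat_pair 192.168.1.211 192.168.122.41 192.168.122.0/24
#   nat_pair 192.168.1.212 192.168.122.42 192.168.122.0/24
```

Generating the pairs from one place keeps the public and private addresses of each VM consistent between the two rules.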

Setting up a sub-interface is pretty easy. | Setting up a sub-interface is pretty easy. | ||

| Line 967: | Line 1,033: | ||

# by the libvirtd rules, you will need to add them in the rc.local or a script of your choice, | # by the libvirtd rules, you will need to add them in the rc.local or a script of your choice, | ||

# which should run after xend. | # which should run after xend. | ||

| - | # -A POSTROUTING -s 192.168.122.41 -d ! 192.168.122.0/255.255.255.0 -j SNAT --to-source 192.168.1. | + | # -A POSTROUTING -s 192.168.122.41 -d ! 192.168.122.0/255.255.255.0 -j SNAT --to-source 192.168.1.211 |

| - | # -A POSTROUTING -s 192.168.122.42 -d ! 192.168.122.0/255.255.255.0 -j SNAT --to-source 192.168.1. | + | # -A POSTROUTING -s 192.168.122.42 -d ! 192.168.122.0/255.255.255.0 -j SNAT --to-source 192.168.1.212 |

COMMIT | COMMIT | ||

[root@xenhost ~]# | [root@xenhost ~]# | ||

| Line 977: | Line 1,043: | ||